
Data observability is an organization's ability to understand, manage, and diagnose the health and state of data across its entire data ecosystem.

Data observability is now a common part of modern data architecture, and the architectures it watches have grown increasingly complex and dynamic. Many data observability tools apply AI and machine learning to their metrics, which improves the reliability of the data and widens the coverage of data quality monitoring. The result is better overall data health across the organization.

“Monitoring” may be the first word that comes to mind when you think of observability. In practice, however, data observability is more than monitoring your data: it is a workflow that ensures errors are resolved quickly whenever they occur. It also acts as a remedial measure that strengthens your data quality standards and your overall data maintenance practices.

Components Of Data Observability

Applying data observability directly helps you keep your data healthy. Keep in mind, however, that data “health” is an umbrella term that covers many different properties.

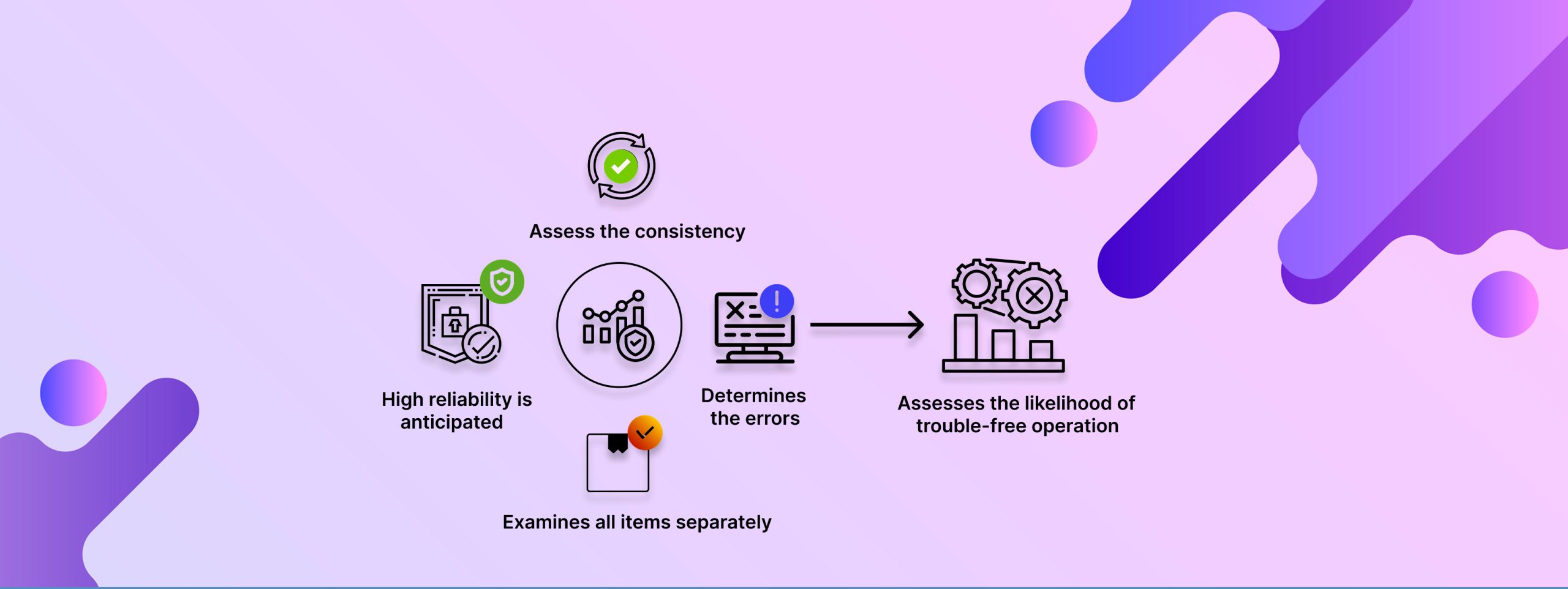

You can measure data observability through three parameters: metrics, logs, and traces. These are the primary components of data observability. Together they provide insight into the data and improve both its quality and its performance.

Metrics

As the name suggests, metrics are indicators that provide quantifiable insight into data. They reveal the health and performance of data throughout its lifecycle; data latency and error rates are typical examples. For organizations, or units within them, where data plays a vital role, metrics help identify anomalies. Beyond detecting errors, metrics enable prompt issue resolution and help keep data at a high quality standard.
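To make the two metrics named above concrete, here is a minimal Python sketch that computes average load latency and error rate for a batch of records and reports which ones exceed a limit. The `Record` shape, the metric names, and the threshold values are all hypothetical assumptions for illustration, not part of any specific observability tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Record:
    # Hypothetical record shape: when an event happened, when it landed
    # in storage, and whether it failed validation.
    event_time: datetime
    loaded_time: datetime
    is_error: bool


def compute_metrics(records: list[Record]) -> dict[str, float]:
    """Compute average load latency (seconds) and error rate for a batch."""
    if not records:
        # An empty batch yields neutral values; a real check might flag it instead.
        return {"avg_latency_s": 0.0, "error_rate": 0.0}
    latencies = [(r.loaded_time - r.event_time).total_seconds() for r in records]
    errors = sum(1 for r in records if r.is_error)
    return {"avg_latency_s": mean(latencies), "error_rate": errors / len(records)}


def breached(metrics: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return the names of metrics that exceed their (assumed) thresholds."""
    return [name for name, limit in thresholds.items() if metrics.get(name, 0.0) > limit]


# Example: two records, one of them late and invalid.
t0 = datetime(2024, 1, 1, 12, 0)
batch = [
    Record(t0, t0 + timedelta(seconds=30), is_error=False),
    Record(t0, t0 + timedelta(seconds=90), is_error=True),
]
metrics = compute_metrics(batch)
print(metrics)  # {'avg_latency_s': 60.0, 'error_rate': 0.5}
print(breached(metrics, {"avg_latency_s": 45.0, "error_rate": 0.1}))
# ['avg_latency_s', 'error_rate']
```

Keeping the metric computation separate from the threshold check lets you tune limits per dataset without touching how the numbers are produced.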

Logs

Keeping a history of your data is important because it lets you diagnose problems after the fact. Logs are detailed records of data events, interactions, and changes, and they are essential for upholding data quality. Organizations should maintain data logs, since they are one of the main instruments for finding data issues. For example, financial organizations store transactions in their databases as logs in chronological order. If a particular transaction is altered by mistake, the error can be pinpointed by walking through the log in order.
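The financial example can be sketched as a small append-only, chronologically ordered log. This is a minimal illustration under assumed names (`LogEntry`, `EventLog`, and the action strings are hypothetical); a production system would typically rely on its database's own transaction log or an audit table.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass(frozen=True)
class LogEntry:
    timestamp: datetime
    transaction_id: str
    action: str  # hypothetical action names, e.g. "created", "amount_changed"
    detail: str


@dataclass
class EventLog:
    """Minimal append-only log: callers add entries only through append()."""
    entries: list[LogEntry] = field(default_factory=list)

    def append(self, entry: LogEntry) -> None:
        # Enforce chronological order so the log can be replayed and audited.
        if self.entries and entry.timestamp < self.entries[-1].timestamp:
            raise ValueError("log entries must be appended in chronological order")
        self.entries.append(entry)

    def history(self, transaction_id: str) -> list[LogEntry]:
        """Return every event for one transaction, oldest first."""
        return [e for e in self.entries if e.transaction_id == transaction_id]


log = EventLog()
log.append(LogEntry(datetime(2024, 3, 1, 9, 0), "tx-42", "created", "amount=100.00"))
log.append(LogEntry(datetime(2024, 3, 1, 9, 5), "tx-7", "created", "amount=20.00"))
log.append(LogEntry(datetime(2024, 3, 1, 9, 7), "tx-42", "amount_changed", "amount=1000.00"))

# Walking tx-42's history in order shows exactly when the amount changed.
for e in log.history("tx-42"):
    print(e.timestamp.isoformat(), e.action, e.detail)
```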

Traces

In a complex data environment, it is extremely important to understand where data comes from and where it goes. Traces show organizations how data moves across that environment. Suppose a retail company uses a machine learning model to analyze the customer journey. By tracing the journey back, the company can see which sources fed the model's data. Tracing is one of the best ways to keep the flow of your data on track, and it also helps you understand the interdependencies among various data sources and systems.
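Tracing data back to its origins amounts to walking a lineage graph upstream. The sketch below assumes a hand-written, hypothetical lineage for the retail example (every dataset name is invented); in practice the graph would come from a lineage or metadata tool.

```python
from collections import deque

# Hypothetical lineage for the retail example: each dataset maps to the
# datasets it is built from (its direct upstream parents).
LINEAGE: dict[str, list[str]] = {
    "customer_journey_model": ["customer_journey"],
    "customer_journey": ["web_clickstream", "orders", "crm_contacts"],
    "orders": ["pos_transactions", "online_checkout"],
    "web_clickstream": [],
    "crm_contacts": [],
    "pos_transactions": [],
    "online_checkout": [],
}


def upstream(dataset: str, lineage: dict[str, list[str]]) -> set[str]:
    """Breadth-first walk up the lineage graph; returns every ancestor of `dataset`."""
    seen: set[str] = set()
    queue = deque(lineage.get(dataset, []))
    while queue:
        parent = queue.popleft()
        if parent in seen:
            continue  # already reached via another path
        seen.add(parent)
        queue.extend(lineage.get(parent, []))
    return seen


def original_sources(dataset: str, lineage: dict[str, list[str]]) -> set[str]:
    """Ancestors with no parents of their own, i.e. where the data first enters."""
    return {d for d in upstream(dataset, lineage) if not lineage.get(d)}


print(sorted(original_sources("customer_journey_model", LINEAGE)))
# ['crm_contacts', 'online_checkout', 'pos_transactions', 'web_clickstream']
```

The `seen` set keeps the walk finite even when two paths converge on the same source, which is common in real lineage graphs.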

Lenses Of Data Observability

Keeping data measurements in check is one way to maintain data quality. Data observability not only improves data quality but also makes data visible. Organizations are often unable to use all of their data, and the untapped portion is known as “dark data”. On average, an estimated 68% of the data an organization stores is dark. Data observability is highly relevant here, because it helps bring this share of data into use.

Data observability works through three core lenses:

I) Data – Examines the overall health of data and resolves quality issues. It also removes anomalies and bottlenecks from the data flow.

II) Pipeline – Focuses on monitoring and understanding the health of data pipelines. It also identifies and removes performance issues and capacity errors.

III) Business – Focuses on how the business consumes data. Understanding this consumption helps address data flow challenges and develop resolutions for the business's data requirements.
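As an illustration of the pipeline lens, the sketch below flags runs that take far longer than their recent history or process more rows than an assumed capacity. The `PipelineRun` fields, the 2x slowdown factor, the 10-run window, and the one-million-row limit are all hypothetical parameters, not recommended values.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class PipelineRun:
    run_id: str
    duration_s: float
    rows_processed: int


def pipeline_issues(runs: list[PipelineRun], slow_factor: float = 2.0,
                    max_rows: int = 1_000_000, window: int = 10,
                    min_history: int = 3) -> list[str]:
    """Flag runs much slower than recent history or above an assumed capacity."""
    issues: list[str] = []
    for i, run in enumerate(runs):
        # Baseline: up to `window` previous runs of the same pipeline.
        history = [r.duration_s for r in runs[max(0, i - window):i]]
        if len(history) >= min_history:
            baseline = median(history)
            if run.duration_s > slow_factor * baseline:
                issues.append(f"{run.run_id}: slow run "
                              f"({run.duration_s:.0f}s vs median {baseline:.0f}s)")
        if run.rows_processed > max_rows:
            issues.append(f"{run.run_id}: exceeds capacity ({run.rows_processed} rows)")
    return issues


runs = [
    PipelineRun("r1", 600.0, 500_000),
    PipelineRun("r2", 620.0, 510_000),
    PipelineRun("r3", 590.0, 505_000),
    PipelineRun("r4", 1500.0, 520_000),
    PipelineRun("r5", 610.0, 1_200_000),
]
for issue in pipeline_issues(runs):
    print(issue)
# r4: slow run (1500s vs median 600s)
# r5: exceeds capacity (1200000 rows)
```

Using the median rather than the mean keeps a single slow run (like `r4`) from inflating the baseline for the runs that follow it.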

How Data Observability Benefits Businesses

Data observability is essential for improving both the quality of your data and the way it flows, and it enhances data visibility in many ways. Let’s look at how it benefits your organization.

Makes Data Accessible and Reliable

Unmanaged data hurts operations, especially when data users cannot access the data they need. It fosters a silo culture and quiet frustration with the system, and it introduces unnecessary errors. With data observability in place, however, the system is continuously monitored, which reduces data mismatches and builds confidence in the data flow.

Moreover, data observability delivers a comprehensive view of data across the organization’s data ecosystem. It helps you pinpoint data issues the moment they happen and mitigate them. It also helps maintain a reliable flow of data within the organization and across channels.

Mitigate Data Issues Rapidly

The greatest strength of data observability is that it helps localize the source of an issue. Once you know where a problem started, you can focus your action plan there, whether the problem is routine or severe. Data observability also uncovers hidden problems such as unhealthy data and data downtime. Overall, with proper data monitoring in place, you can expect your day-to-day operations to run smoothly.
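Localizing the source of an issue can be approximated with a simple heuristic over a lineage graph: among all failing datasets, keep only those whose direct parents are healthy, because downstream failures usually just inherit an upstream problem. The lineage and dataset names below are hypothetical, and real root-cause analysis typically weighs more signals than this.

```python
# Hypothetical lineage: dataset -> its direct upstream parents.
LINEAGE: dict[str, list[str]] = {
    "revenue_dashboard": ["revenue_daily"],
    "revenue_daily": ["orders_clean"],
    "orders_clean": ["orders_raw"],
    "orders_raw": [],
    "marketing_report": ["campaigns"],
    "campaigns": [],
}


def localize_root_causes(failing: set[str], lineage: dict[str, list[str]]) -> set[str]:
    """Failing datasets whose direct parents are all healthy.

    Downstream failures that merely inherit a parent's problem are filtered
    out, leaving the points where the issue most likely originated.
    """
    return {d for d in failing if not any(p in failing for p in lineage.get(d, []))}


failing = {"revenue_dashboard", "revenue_daily", "orders_clean"}
print(localize_root_causes(failing, LINEAGE))  # {'orders_clean'}
```

Here three datasets report problems, but only `orders_clean` has a healthy parent, so that is where an investigation would start.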

Identify and Remove Data Anomalies

At the surface level, all data processes may appear normal and healthy. When you dive deeper, however, you may find plenty of problems hidden beneath the surface. Data observability helps you identify these hidden problems and address them efficiently. It can also stop anomalies before they enter your system, so your operations run smoothly end to end.
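A common way to catch an anomaly before it enters the system is to compare each incoming batch against recent history. This minimal sketch uses a z-score test on batch row counts and quarantines batches that look abnormal; the 3.0 threshold, the in-memory `warehouse` and `quarantine` lists, and the row data are assumptions for illustration only.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], value: float, z_threshold: float = 3.0) -> bool:
    """Z-score test: is `value` more than `z_threshold` standard deviations from the mean?"""
    if len(history) < 2:
        return False  # not enough history to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu  # flat history: any change is unusual
    return abs(value - mu) / sigma > z_threshold


def admit_batch(rows: list[dict], row_count_history: list[int],
                warehouse: list[dict], quarantine: list[dict]) -> bool:
    """Load a batch only if its row count looks normal; otherwise quarantine it."""
    if is_anomalous(row_count_history, len(rows)):
        quarantine.extend(rows)
        return False
    warehouse.extend(rows)
    row_count_history.append(len(rows))  # only normal batches extend the baseline
    return True


history = [1000, 1020, 990, 1010, 980]
warehouse: list[dict] = []
quarantine: list[dict] = []
print(admit_batch([{"id": i} for i in range(1005)], history, warehouse, quarantine))  # True
print(admit_batch([{"id": i} for i in range(400)], history, warehouse, quarantine))   # False
```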

Data observability acts as a continuous watchdog: it scans the data constantly to identify and eliminate errors. It is more than mere monitoring, because it keeps your system alert and prompts immediate action when something goes wrong. If your organization handles large volumes of data, you need to take data observability seriously.
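The watchdog idea can be sketched as a loop that runs a set of checks on a schedule and raises an alert for every failure. In this minimal version the checks, their names, and the `alert` callback are hypothetical placeholders; a real deployment would run on a scheduler and send alerts to a paging or chat system.

```python
import time
from typing import Callable, Optional

# A check returns (passed, message). Check names below are hypothetical.
Check = Callable[[], tuple[bool, str]]


def run_watchdog(checks: dict[str, Check], alert: Callable[[str], None],
                 interval_s: float = 60.0, max_cycles: Optional[int] = None) -> int:
    """Run every check, alert on failures, sleep, repeat. Returns the alert count.

    With max_cycles=None the loop runs forever, like a long-lived watchdog process.
    """
    cycles = alerts = 0
    while max_cycles is None or cycles < max_cycles:
        for name, check in checks.items():
            try:
                passed, message = check()
            except Exception as exc:  # a crashing check is itself worth an alert
                passed, message = False, f"check raised {exc!r}"
            if not passed:
                alert(f"[{name}] {message}")
                alerts += 1
        cycles += 1
        if max_cycles is None or cycles < max_cycles:
            time.sleep(interval_s)
    return alerts


received: list[str] = []
checks: dict[str, Check] = {
    "orders_fresh": lambda: (True, "loaded 5 minutes ago"),
    "orders_not_empty": lambda: (False, "0 rows loaded today"),
}
count = run_watchdog(checks, received.append, interval_s=0, max_cycles=2)
print(count)  # 2
```

Treating an exception inside a check as a failure matters: a silently broken check would otherwise look like a healthy system.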