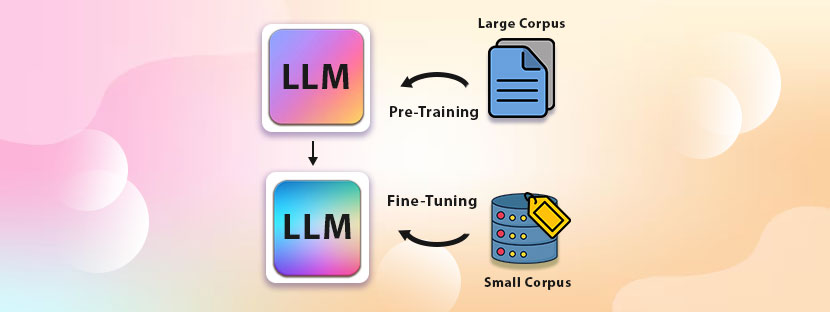

The output quality of Large Language Models (LLMs) depends heavily on two critical processes:

- Pre-Training

- Fine-Tuning

Together, these processes let AI systems produce strong general results and also perform specific tasks. They make a model versatile enough to handle real-world complexity.

Pre-training takes place while the model is being built. Fine-tuning comes into the picture once that base model exists: it specializes the model so that it can handle domain-specific tasks. Comparing the two requires a clear understanding of both concepts.

Let’s first understand each concept in detail.

Pre-Training

Pre-training builds the foundation of an LLM. In this process, the model is fed data from a wide variety of sources to make it versatile. Models such as the GPT series, BART, and RoBERTa were developed through pre-training. It includes several methods, which can be summarized as:

- Masked Language Modeling

- Causal Language Modeling

- Sequence to Sequence Learning

In the context of NLP development, these methods prepare the AI system for different tasks. Masked language modeling trains the model to predict missing words using the context on both sides. Causal language modeling, on the other hand, trains the model to predict the next word in a sentence, which is what lets it generate coherent text. Lastly, sequence-to-sequence learning trains the model to map an input sequence to an output sequence, as in translation or summarization.
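To make the three objectives concrete, here is a minimal Python sketch of how each one could turn the same sentence into a training example. The function names, the `[MASK]` placeholder, and the toy sentence are illustrative assumptions, not the API of any particular library. Real pipelines work on subword token IDs and usually pick the masked positions at random.

```python
MASK = "[MASK]"  # placeholder token; the exact string is an assumption


def masked_lm_example(tokens, mask_positions):
    """Masked language modeling: hide chosen tokens and predict them from both sides."""
    inputs = [MASK if i in mask_positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in mask_positions}
    return inputs, targets


def causal_lm_examples(tokens):
    """Causal language modeling: predict each token from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def seq2seq_example(source_tokens, target_tokens):
    """Sequence-to-sequence: pair a full input sequence with a full output sequence."""
    return {"encoder_input": source_tokens, "decoder_target": target_tokens}


tokens = "the model learns language patterns".split()

# Hide the token at position 2 ("learns") and keep it as the prediction target.
mlm_inputs, mlm_targets = masked_lm_example(tokens, mask_positions={2})

# One (prefix, next token) pair per position after the first.
clm_pairs = causal_lm_examples(tokens)

# A toy summarization-style pair: long input, shorter output.
s2s = seq2seq_example(tokens, "model learns patterns".split())
```

The masked example sees context on both sides of the gap, while every causal pair only sees what came before the target word. That difference is why masked models suit understanding tasks and causal models suit text generation.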

Pre-training makes it possible to develop a model with a general understanding of language, which it can then carry over to downstream tasks. Here are some of the core benefits of this process:

→ Cut the Cost of Specialization: A pre-trained model reduces the cost of training an AI system for each specific task from scratch, because it already handles general tasks well.

→ Reusable System: A pre-trained model is the foundation of AI development, and it prepares the AI system for fine-tuning and other adaptation steps.

However, because of its technical complexity, pre-training an AI model on a large dataset is expensive. It also needs extensive hardware support. High-quality data is a fundamental need for LLM development, and it is one of the biggest factors in the quality of the model’s outputs.

Fine-Tuning

After pre-training, the role of fine-tuning is to adapt the AI system to perform specific tasks. Fine-tuning is a process that refines a pre-trained model to sharpen its outputs. It typically uses labeled data to continue training the model and optimize its performance on the target task.
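The idea of continuing training from existing weights on labeled data can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: a two-feature logistic classifier stands in for the model, and `pretrained_weights`, `labeled_data`, and the hyperparameters are hypothetical values. Fine-tuning a real LLM follows the same pattern but updates far more parameters with a deep learning framework.

```python
import math


def predict(weights, bias, features):
    """Probability of the positive label under a logistic model."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def average_loss(weights, bias, data):
    """Mean binary cross-entropy over labeled (features, label) pairs."""
    total = 0.0
    for features, label in data:
        p = predict(weights, bias, features)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(data)


def fine_tune(weights, bias, data, learning_rate=0.1, epochs=50):
    """Continue training from the given weights with plain gradient descent."""
    weights = list(weights)  # copy so the starting weights stay untouched
    for _ in range(epochs):
        for features, label in data:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Hypothetical starting point standing in for weights learned during pre-training.
pretrained_weights, pretrained_bias = [0.2, -0.1], 0.0

# Small labeled dataset for the target task: (features, label).
labeled_data = [([1.0, 0.0], 1), ([0.9, 0.2], 1), ([0.1, 1.0], 0), ([0.0, 0.8], 0)]

loss_before = average_loss(pretrained_weights, pretrained_bias, labeled_data)
tuned_weights, tuned_bias = fine_tune(pretrained_weights, pretrained_bias, labeled_data)
loss_after = average_loss(tuned_weights, tuned_bias, labeled_data)

print(f"loss before fine-tuning: {loss_before:.3f}")
print(f"loss after fine-tuning:  {loss_after:.3f}")
```

The key point is that training starts from the existing weights rather than from random values, so a small labeled dataset is enough to move the model toward the target task.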

Fine-tuning is a broad process that covers different methods and target tasks. Sentiment analysis and data classification are typical tasks a model is fine-tuned for, and annotation work such as image annotation supplies the labeled data. This flexibility makes fine-tuning suitable for real-world applications. Here are the main benefits of fine-tuning:

→ Task-Specific Accuracy: Fine-tuning trains the model to handle a particular set of tasks accurately. Because of this, the technique is widely used for domain-specific tasks.

→ Excellent Efficiency: Fine-tuning requires fewer resources than pre-training, so it is a less expensive way to get strong results.

However, like any other training procedure, fine-tuning has some cons too. Fine-tuning on a very small dataset tends to overfit, so it works best when enough labeled examples are available. Fine-tuning can also erase part of the general knowledge the model acquired during pre-training, a problem known as catastrophic forgetting.

Difference Between Pre-Training and Fine-Tuning

| Aspects | Pre-Training | Fine-Tuning |
|---|---|---|
| Goal | Building a general-purpose foundation | Optimizing a model for task-specific performance |
| Data volume | Gigantic volume of diverse data | Small, curated dataset |
| Cost to build/develop | High; it can take months | Comparatively low; fine-tuning can be done within a few days, or sometimes hours |
| Scalability | Covers multiple domains, so applicability is high | Limited to specific tasks |
| Techniques used | seq2seq, MLM, CLM, etc. are used to pre-train the model. | Data annotation, semantic image segmentation, data labeling, etc. are used to prepare data for fine-tuning the model. |

Pre-Training vs Fine-Tuning: Which One Is Better?

Choosing the right method is essential when training a model for a given job. The idea is simple: if you’re developing a foundational AI or ML model, you need pre-training. If you want an existing model to handle specific tasks, you rely on fine-tuning. The situations below show when to choose each one.

When You Need Pre-Training

Pre-training builds AI models from scratch. It gives the model the strength to perform a wide variety of tasks across domains, but the complexity of developing a model this way is high. Consider pre-training when:

- You are developing a foundational model that must adapt to a wide range of tasks.

- You want to push the boundaries of AI development.

When You Need Fine-Tuning

Once the model is developed, fine-tuning allows it to perform specific tasks. Fine-tuning works on top of a pre-trained model: it optimizes the model on task-specific training data. Consider fine-tuning when:

- You need the model to perform specific tasks rather than general ones.

- You have a limited budget: fine-tuning costs less than pre-training and can be done with fewer resources.
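To put the cost gap in rough numbers, the sketch below uses a widely cited rule of thumb: training a transformer takes about 6 floating-point operations per parameter per training token. The model size and token counts are hypothetical values chosen only for illustration, not measurements from any real system.

```python
def training_flops(num_parameters, num_tokens):
    """Approximate training compute using the ~6 * N * D rule of thumb."""
    return 6 * num_parameters * num_tokens


# Hypothetical numbers, chosen only to illustrate the scale difference.
num_parameters = 1_000_000_000        # 1B-parameter model
pretraining_tokens = 20_000_000_000   # 20B tokens of broad, diverse text
finetuning_tokens = 10_000_000        # 10M tokens of curated task data

pretraining_cost = training_flops(num_parameters, pretraining_tokens)
finetuning_cost = training_flops(num_parameters, finetuning_tokens)
cost_ratio = pretraining_cost / finetuning_cost

print(f"pre-training: {pretraining_cost:.1e} FLOPs")
print(f"fine-tuning:  {finetuning_cost:.1e} FLOPs")
print(f"ratio:        {cost_ratio:,.0f}x")
```

Under this approximation the model size cancels out, so the ratio comes down to how many tokens each stage processes. A small curated dataset is what keeps fine-tuning cheap.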

Ultimately, the choice depends on what you want your AI system to do. Pre-training produces foundational models, while fine-tuning specializes them for narrower work. As we discussed, pre-training becomes an option when you have a gigantic volume of diverse data and the hardware to process it. Fine-tuning does not require nearly as much data, but the data must be curated and labeled, and it takes knowledge and skill to fine-tune machine learning models well.