
Wish you could say a spell to get all your files compiled in order!

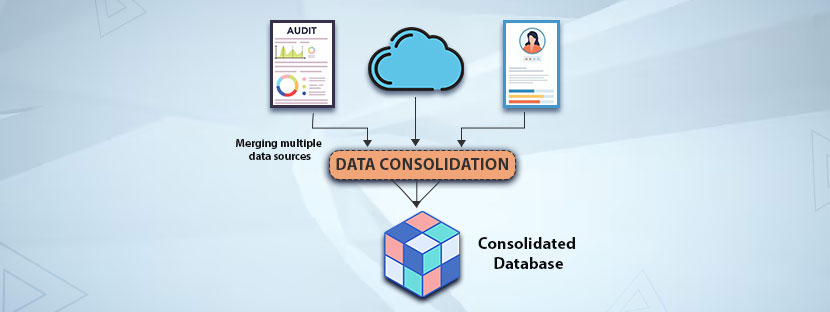

Merging data from different files and making a consolidated database out of that is a really hard task. No magic spell works here. Sadly!

But having an integrated database is extremely important for every organization today. Scattered, inconsistent data spread across different databases creates a mess. Ultimately, it pushes your organization into a decision-making trap and a silo culture.

The good news is that merging multiple data sources into a single source of truth (a consolidated database) is manageable once you follow a clear process. Use this post to pick up everything you need to know to consolidate your data.

Okay to start now?

Types of Data Consolidation

Data consolidation is the process of integrating data from multiple sources into a single location. In most cases, though, the data arrives in different formats. Hence, the consolidation process differs depending on the type of data involved.

Database consolidation

Simply put, database consolidation means merging multiple databases into a single, unified database. Consolidating databases improves data accessibility, because data users can find all kinds of data on a single platform. It also eliminates the silo culture in the data architecture.

Applications → Suitable for organizations that have data spread across multiple departments, applications, and regions. Data users across all departments can easily locate all the relevant data using the consolidated database.

Application consolidation

It focuses on integrating various software applications that are being used within an organization. It aims to develop an inclusive view of business operations, customer engagement, and employees.

Applications → CRM, ERP, and HR software are prime candidates for this type of consolidation.

File consolidation

Information can be stored in many different file types and formats. File consolidation solves file conversion challenges by unifying data stored in multiple formats, be it a spreadsheet, a CSV file, or any other type of document. Once the files are converted to a common format, data flows into the system consistently.

Applications → This type of consolidation is ideal for organizations that collect data in different formats and need one database that unifies them.
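To make the idea concrete, here is a minimal sketch of file consolidation in Python. It assumes two hypothetical inputs, one CSV and one JSON, that carry the same three fields (`id`, `name`, `city`). The sample data and function names are made up for illustration and are not tied to any particular tool.

```python
import csv
import io
import json

# Hypothetical sample inputs standing in for real files on disk.
CSV_TEXT = "id,name,city\n1,Asha,Pune\n2,Ben,Leeds\n"
JSON_TEXT = '[{"id": 3, "name": "Chen", "city": "Taipei"}]'


def read_csv_records(text):
    """Parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def read_json_records(text):
    """Parse a JSON array of objects into a list of dicts."""
    return json.loads(text)


def consolidate(*record_lists):
    """Merge record lists into one list, casting every id to int."""
    unified = []
    for records in record_lists:
        for row in records:
            unified.append(
                {"id": int(row["id"]), "name": row["name"], "city": row["city"]}
            )
    return unified


records = consolidate(read_csv_records(CSV_TEXT), read_json_records(JSON_TEXT))
print(records)
```

The key point is the single output shape: whatever the input format, every record leaves `consolidate` with the same fields and types.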

Financial consolidation

Financial consolidation combines all financial information to provide an overall view of the organization’s financial health. It brings together the various finance-related processes and reports to prepare the consolidated database.

Applications → Businesses working with multiple subsidiaries that need unified financial reports require this type of database consolidation process.

Cloud data consolidation

As the name suggests, it involves merging data from different cloud platforms and services into a single cloud storage environment. It helps centralize all the stored information and streamlines the data integration process across all cloud platforms.

Applications → Best suited to organizations that demand strong security for their cloud database.

Data warehouse consolidation

Building a centralized data warehouse is the single aim of the data warehouse consolidation process. It supports complex data integration requirements, enables insightful reporting, and drives better decisions for the organization.

Applications → Perfect for organizations that need a unified and consistent system for data reporting and assessment.

Use Cases of Data Consolidation

Data consolidation is simply the process of unifying multiple forms of data into a single source. The method involves converting the existing data and storing it in a defined database using a unified data format. This creates a centralized platform for smoother access to all data. Bringing different types of data together on a centralized platform makes it easier to turn raw data into actionable insights that drive better decision-making.

Now, let’s understand the core applications of data consolidation in different fields.

◈ Retail

Businesses in the retail industry need an integrated database to handle various operations. Retail stores mainly handle data from online sales, in-store purchases, inventory counts, and so on. Besides operations, data also comes from marketing channels, which is essential for retail sales too. Therefore, integrated or consolidated retail databases help optimize retail operations and boost personalized marketing efforts.

◈ Healthcare

Consolidating patient records streamlines the diagnosis process. All patient information, lab reports, medical reports, and other relevant data come together in the consolidated database. It helps healthcare service providers as well as patients keep track of every single procedure.

◈ Financial

Combining transactional data and customer profiles helps financial companies to assess risk profiles and maintain compliance. Financial organizations consistently require a comprehensive database to track financial levels, monitor investments, and make informed financial decisions.

◈ Manufacturing

Merging production data and supply chain metrics is important for manufacturing companies to track their operations. Data consolidation acts as a bridge that simplifies the operational procedures of large manufacturing companies. These companies mostly have fragmented datasets spread across multiple processes, and a consolidated database helps them streamline those processes.

Difference Between Data Integration And Data Consolidation

The two terms “data consolidation” and “data integration” are often used interchangeably, but there is a sharp difference between them.

And the difference lies in the process itself. Data integration connects data from multiple sources in real time for operational usage. Data consolidation, on the other hand, merges data into a single repository for analysis.

From this very difference, we can determine that data integration supports ongoing updates, whereas consolidation creates a stable and unified database.

Interestingly, data integration creates a unified view without physically relocating the data. Imagine this: data integration builds a bridge between two islands of data. A single interface tracks the exchange of data in real time between those islands using the bridge. Technically, processes such as ETL, ELT, and data virtualization are part of data integration.

Data consolidation, by contrast, physically brings the scattered data together under one roof. Data warehouses, data lakes, and cloud storage systems are examples of consolidated stores. Consolidating data on one platform improves governance and keeps operations consistent.

Easy Ways To Merge Multiple Data Sources

Merging multiple sources into a single database requires a systematic approach and diligent follow-up.

To clarify, I’m highlighting all the essential steps you need to follow to merge your data. These steps are interrelated, so you must follow them in the order listed below.

➥ Identify data sources

You must have all the data that you want to integrate before starting the process. Make sense? So, identifying the data sources simply means having the databases you’re going to consolidate at hand. These sources can include APIs, structured data, or even unstructured data. Besides identifying the sources, you also have to understand the data structure of each one for the next step: mapping.

➥ Data mapping

Make full use of your data by defining how fields and metadata relate across your sources. Before you put your data into a data consolidation tool, you have to complete the data mapping. You need to ensure that a consistent schema is defined in the target system, which is most likely your data warehouse. Remember, you must keep data formats consistent throughout the data warehouse. Otherwise, the relationships between your datasets will break down.
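A mapping can be as simple as a lookup table from source field names to target field names. The sketch below assumes two hypothetical sources, `crm` and `billing`, and a target schema with `customer_id` and `email`. None of these names come from a real system.

```python
# Hypothetical mapping from each source's column names to the target schema.
FIELD_MAP = {
    "crm": {"cust_id": "customer_id", "mail": "email"},
    "billing": {"CustomerID": "customer_id", "EmailAddress": "email"},
}


def map_record(source, record):
    """Rename a source record's fields to the target schema names.

    Fields without a mapping are dropped so that only the agreed
    target columns reach the warehouse.
    """
    mapping = FIELD_MAP[source]
    return {target: record[src] for src, target in mapping.items() if src in record}


crm_row = {"cust_id": 7, "mail": "a@example.com", "notes": "vip"}
billing_row = {"CustomerID": 7, "EmailAddress": "a@example.com"}

mapped = [map_record("crm", crm_row), map_record("billing", billing_row)]
print(mapped)
```

Keeping the mapping as data rather than code means a new source only needs a new entry in `FIELD_MAP`.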

➥ Extract and Transform

Once you’re done with data mapping, use an ETL or ELT pipeline to extract data from your selected sources. With ETL, you standardize and transform the data before loading it. With ELT, you load the raw data first and transform it inside the target system. Removing duplicate records is part of this step as well. The main goal of the pipeline is to consolidate the entire dataset, though it may also serve other goals that support decision-making.

➥ Load your data

After extraction and the necessary transformations, your data is ready to be loaded into your target system, whether that is a data lake, a data warehouse, or something else. Depending on your pipeline (ETL or ELT), some transformation may happen before or after this load. Ultimately, the migration needs to run smoothly, without causing downtime.
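Here is a rough sketch of the extract, transform, and load steps in ETL order. It uses Python’s built-in `sqlite3` module with an in-memory database as a stand-in for the warehouse. The `payments` table and the sample rows are assumptions for illustration only.

```python
import sqlite3

# Hypothetical rows already extracted from two sources.
source_rows = [
    {"email": " Ann@Example.com ", "amount": "10.50"},
    {"email": "bob@example.com", "amount": "7"},
]


def transform(row):
    """Standardize one row: trim and lowercase the email, cast amount to float."""
    return (row["email"].strip().lower(), float(row["amount"]))


def load(conn, rows):
    """Create the target table if needed and insert the transformed rows."""
    conn.execute("CREATE TABLE IF NOT EXISTS payments (email TEXT, amount REAL)")
    conn.executemany("INSERT INTO payments (email, amount) VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # in-memory stand-in for the warehouse
load(conn, [transform(r) for r in source_rows])
loaded = conn.execute("SELECT email, amount FROM payments ORDER BY email").fetchall()
print(loaded)
```

In an ELT setup the order flips: the raw rows would be loaded first and the standardization would run as SQL inside the target system.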

➥ Merge & Integration

Once loading is complete, merging and integration begin. Following your data mapping and transformation rules makes merging easy and effective. Always make sure there are no duplicate records in your database after merging multiple datasets.

Apply a data cleansing method as you consolidate so that you end up with a duplicate-free database. Most duplicate records in a consolidated database appear when two datasets (especially tables) are merged. So, keep checking your databases to find and eliminate duplicate records.
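When both tables share a reliable key, duplicates can be avoided at merge time. This is a minimal sketch that assumes a hypothetical `customer_id` key and keeps the first record seen for each key. A real project might prefer the most recent or most complete record instead.

```python
def merge_without_duplicates(*tables, key="customer_id"):
    """Merge lists of records, keeping the first record seen for each key."""
    merged = {}
    for table in tables:
        for row in table:
            merged.setdefault(row[key], row)
    return list(merged.values())


# Hypothetical tables from two departments with one overlapping customer.
sales = [{"customer_id": 1, "name": "Ann"}, {"customer_id": 2, "name": "Bob"}]
support = [{"customer_id": 2, "name": "Bob"}, {"customer_id": 3, "name": "Cy"}]

merged = merge_without_duplicates(sales, support)
print(merged)
```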

➥ Verification & validation

Are you using any tools to validate your data?

If you do, use those tools to verify and validate all your data against your quality standards. A consolidated database should be built only from valid, verified data. Hence, test your records regularly to keep queries and operations efficient. Bringing in experts with the right skills to verify and validate data is also a good option.
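If you don’t have a dedicated tool yet, even a few hand-written rules catch a lot. The sketch below assumes three hypothetical rules (a required `customer_id`, a loosely checked `email`, and a non-negative `amount`). The fields and rules are illustrative, not a standard.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose check


def validate(record):
    """Return a list of problems found in one record (empty means valid)."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    if not EMAIL_RE.match(record.get("email", "")):
        problems.append("invalid email")
    if record.get("amount", 0) < 0:
        problems.append("negative amount")
    return problems


rows = [
    {"customer_id": 1, "email": "ann@example.com", "amount": 10.0},
    {"customer_id": None, "email": "not-an-email", "amount": -5},
]
report = {i: validate(r) for i, r in enumerate(rows)}
print(report)
```

Returning a list of problems, rather than stopping at the first one, makes it easier to report every issue in a record in one pass.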

➥ Create a unified data storage

The main aim of creating a consolidated database is to store all your data in a centralized repository. For that, you need to choose a compatible storage solution depending on your business and performance needs.

Consider these essential areas when you choose your storage to keep all data.

- Type of data, including formats and subject

- Volume of total data

- Number of users

- Needs for retention

- Query needs

- Your budget (most important)

The size, capacity, and security level of your data storage depend on the factors above, so weigh each of them carefully.

Connecting Data Consolidation With Other Data Management Functions

Data management is a broad discipline that involves various functions. When you consolidate your data sources, the other data management tasks still need to be done well. Here is a glimpse of how you can use your consolidated database to handle various data management tasks.

Master data management using a consolidated database

A consolidated database that stores consistent information is very useful for the master data management process. The master database contains golden records, which are essential for every organization. A trustworthy consolidated database is essential for storing these highly important master datasets.

Managing data quality in a consolidated data environment

Keeping high-quality data in a secure database is a priority. A consolidated database provides the best environment to maintain data in the highest quality. It involves consolidating various forms of data into a single format, which helps create a comprehensive data environment across the organization.

Challenges You May Face While Merging Data

Merging multiple sources is a big challenge. Every data source comes with its own obstacles that you need to handle with precision.

So brace yourself to face challenges!!

You may encounter the following challenges when merging your databases.

Data heterogeneity

Don’t assume you’ll get all your data in a uniform format. Each data type comes with a different format, and you have to accept it. Before merging your datasets, you need to make sure that all your data is in the same format. Homogeneity of all data formats is essential for merging all the databases.

However, businesses encounter data heterogeneity while merging raw data. This heterogeneity falls into two types.

i. Structural heterogeneity

Structural heterogeneity occurs when data from different sources are organized in varying formats or structures, making it difficult to merge them into a single, consistent dataset.

For example, one system might store customer details as “First Name” and “Last Name” in separate fields, while another system keeps them together as “Full Name.” These differences in table structures, schemas, file formats, or data models cause confusion during integration.

In real-world scenarios, structural heterogeneity often arises when combining data from multiple departments, legacy systems, or third-party vendors. Even when the data refers to the same entities, variations in how it’s stored, like different column names, data types, or hierarchical arrangements, create mismatches. To resolve this issue, data mapping, schema matching, and transformation tools are used to align structures.

Using standardized data models, consistent formats, and clear documentation can also reduce these inconsistencies. In short, structural heterogeneity is a major technical barrier in data merging because it focuses on how data is organized, not just what it contains.
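The “First Name”/“Last Name” versus “Full Name” example can be aligned with a small transformation. This sketch assumes hypothetical field names (`first_name`, `last_name`, `full_name`) and splits on the first space, which is a simplification that will mishandle some real names.

```python
def to_target_schema(record):
    """Return a record with separate first_name and last_name fields.

    Accepts either the split layout or a single full_name field. The split
    on the first space is a simplification and will mishandle some names.
    """
    if "full_name" in record:
        first, _, last = record["full_name"].strip().partition(" ")
        return {"first_name": first, "last_name": last}
    return {"first_name": record["first_name"], "last_name": record["last_name"]}


system_a = {"first_name": "Maria", "last_name": "Lopez"}
system_b = {"full_name": "Maria Lopez"}

aligned = [to_target_schema(system_a), to_target_schema(system_b)]
print(aligned)
```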

ii. Lexical heterogeneity

Lexical heterogeneity refers to differences in the naming, labeling, or representation of data values across systems. In simpler terms, it’s when the same concept is described using different words or symbols.

For instance, one database might record “Gender” as “Male/Female,” while another uses “M/F.” Similarly, one dataset may use “USA,” another “United States,” and a third “U.S.” — all meaning the same thing but represented differently.

These variations make automated data merging difficult because systems can’t easily recognize that the values refer to identical entities. Lexical heterogeneity commonly occurs in organizations with multiple databases developed independently or sourced from different vendors. To overcome this, data standardization, synonym dictionaries, and reference data mappings are applied to unify labels. Advanced methods like Natural Language Processing (NLP) can also help identify context-based similarities.
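A synonym dictionary is the simplest of these fixes. The sketch below reuses the country and gender examples from above. The dictionaries and the canonical labels (`US`, `Male`, `Female`) are assumptions, and unknown values pass through unchanged so they can be reviewed later.

```python
# Hypothetical reference mappings; a real project would maintain these as reference data.
COUNTRY_SYNONYMS = {"usa": "US", "united states": "US", "u.s.": "US"}
GENDER_SYNONYMS = {"m": "Male", "male": "Male", "f": "Female", "female": "Female"}


def standardize(value, synonyms):
    """Map a raw value to its canonical label; return it unchanged if unknown."""
    return synonyms.get(value.strip().lower(), value)


countries = [standardize(v, COUNTRY_SYNONYMS) for v in ["USA", "United States", "U.S."]]
genders = [standardize(v, GENDER_SYNONYMS) for v in ["M", "Female", "f"]]
print(countries, genders)
```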

Scalability & Flexibility

When a data merging project starts, there is usually a fixed number of datasets to merge, and the merging and implementation plans are framed around that fixed set. The main challenge comes when additional datasets suddenly need to be merged within a fixed timeframe. What you need is a system that can easily take on additional data sources with varying data structures and storage mechanisms.

So how can the scalability issues be resolved?

Implementing a scalable integration architecture is essential at this point. Build a data integration architecture that can pull data from a wide range of sources, such as SQL databases, APIs, or existing ETL pipelines. It should also support various data formats, including JSON, XML, and plain text files.

Remember, you need to integrate things gradually over time. Avoid hard-coding the merging process, as hard-coded logic has to be rewritten whenever a new source is added. Design the integration process so that it can connect to other data sources whenever needed. Keeping it flexible and open to new data sources is ideal here.
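One way to avoid hard-coding is a small registry of readers, so that supporting a new format means adding one function. This is a sketch under that assumption. The `register` decorator and `ingest` function are hypothetical names, not part of any library.

```python
import csv
import io
import json

READERS = {}  # format name -> function that parses text into a list of dicts


def register(fmt):
    """Decorator that registers a reader function under a format name."""
    def wrap(func):
        READERS[fmt] = func
        return func
    return wrap


@register("csv")
def read_csv(text):
    return list(csv.DictReader(io.StringIO(text)))


@register("json")
def read_json(text):
    return json.loads(text)


def ingest(fmt, text):
    """Dispatch to the registered reader; fail clearly on unknown formats."""
    if fmt not in READERS:
        raise ValueError(f"no reader registered for format: {fmt}")
    return READERS[fmt](text)


rows = ingest("csv", "id,name\n1,Ann\n") + ingest("json", '[{"id": "2", "name": "Bob"}]')
print(rows)
```

Adding XML support later would mean writing one more function decorated with `@register("xml")`, with no change to `ingest`.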

Data can be duplicated

One record may get entered into the database multiple times if there is nothing in place to deduplicate the datasets. It becomes a real challenge if duplicate records pile up in the database over a long time. Multiple records representing the same entity may or may not share a unique identifier, and you won’t know until you check. Either way, you need to ensure that no entity ends up in the database twice.

As a solution, data matching algorithms are a good way to identify duplicate entries. Plus, add conditional formatting rules to flag similar data columns or attributes that contain valid values. Data matching helps ensure that your consolidated data ends up duplicate-free in a single, unified form.
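When records have no shared identifier, fuzzy string matching is one common data matching technique. The sketch below uses `difflib.SequenceMatcher` from Python’s standard library. The 0.85 threshold is an assumption you would tune on your own data, and the pairwise comparison only suits small datasets.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Return a 0..1 similarity ratio between two strings, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_likely_duplicates(names, threshold=0.85):
    """Return pairs of names whose similarity meets the threshold."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if similarity(names[i], names[j]) >= threshold:
                pairs.append((names[i], names[j]))
    return pairs


names = ["Jon Smith", "John Smith", "Maria Lopez"]
duplicates = find_likely_duplicates(names)
print(duplicates)
```

Pairs flagged this way are candidates, not confirmed duplicates, so a review step before deleting anything is a sensible precaution.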

A long merging process

Data consolidation projects usually take longer than planned.

Do you know why this happens? Here are the main reasons.

▶️ Inefficient planning

▶️ Unrealistic goals

▶️ Frequent changes at the last minute

A thorough evaluation of all your databases is needed before you develop a roadmap for data merging and consolidation. Unanticipated last-minute additions make the merging process unnecessarily complex.

Velocity of the data

Data velocity is the speed at which data is generated, and keeping up with it is critical when data moves fast. Pushing data continuously in real time is crucial for a functional data processing system. The consolidated database therefore has to sustain a high-velocity, consistent, and accurate flow of data into the system.

To solve this, stream processing technologies such as Apache Flink or Apache Kafka can help handle large volumes of data. These are real-time data streaming frameworks built to handle high-velocity data. They also enable continuous data synchronization, so your data can be distributed across your data channels with minimal delay and a low risk of data loss. You can also add a buffering mechanism to keep your data streams running in parallel efficiently.
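To illustrate the buffering idea only, here is a small in-process sketch that groups a stream of events into micro-batches. It does not use the Flink or Kafka APIs. The `micro_batches` function and the event shape are hypothetical, and a production setup would rely on the buffering those frameworks provide.

```python
def micro_batches(events, batch_size):
    """Group an incoming event stream into lists of at most batch_size events."""
    batch = []
    for event in events:
        batch.append(event)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # flush the final partial batch
        yield batch


stream = ({"event_id": i} for i in range(7))  # hypothetical event stream
batches = list(micro_batches(stream, batch_size=3))
sizes = [len(b) for b in batches]
print(sizes)
```

Writing to the consolidated store once per batch instead of once per event is what lets the target keep up with a fast source.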

Data synchronization issues

Keeping all your data accurate and up to date is a major challenge when consolidating a large volume of data. Data professionals refer to this as a data synchronization issue. Problems arise when records are inserted, updated, or deleted in the source systems in real time and those changes have to be tracked. Keeping all your data fields synced with accurate information is the ultimate goal of the data consolidation process, right?

That’s why we use change data capture (CDC) tools to track incremental changes in the source system and ensure all updates are reflected consistently in the merged datasets. CDC comes in several forms, such as log-based, trigger-based, and query-based capture, and choosing the right one improves data synchronization.

Furthermore, integrating change data capture into the ETL pipeline makes data consolidation faster and syncs all datasets in real time.
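As a rough illustration of the query-based flavor of change data capture, the sketch below polls a source table for rows changed since the last sync. It assumes a hypothetical `customers` table with an integer `updated_at` column and uses an in-memory SQLite database as the source. Dedicated CDC tools work differently under the hood, especially the log-based ones.

```python
import sqlite3

source = sqlite3.connect(":memory:")  # stand-in for the source system
source.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at INTEGER)"
)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ann", 100), (2, "Bob", 105)],
)

target = {}  # stand-in for the consolidated store, keyed by id


def sync_changes(last_seen):
    """Copy rows changed after last_seen into target; return the new watermark."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_seen,),
    ).fetchall()
    for row_id, name, updated_at in rows:
        target[row_id] = name
        last_seen = max(last_seen, updated_at)
    return last_seen


watermark = sync_changes(0)  # initial sync picks up both rows
source.execute("UPDATE customers SET name = 'Robert', updated_at = 110 WHERE id = 2")
watermark = sync_changes(watermark)  # second sync picks up only the changed row
print(target, watermark)
```

One known limitation of this polling approach is that it cannot see deleted rows, which is a common reason to choose log-based or trigger-based capture instead.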