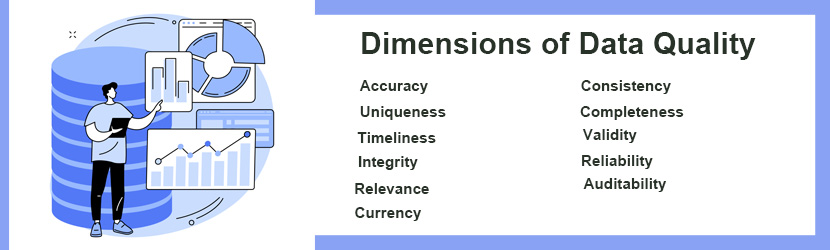

Data quality parameters work as qualifiers: they check whether your data is mature and dependable enough to trust. Only then can you confidently base strategic decisions on it.

Below, we describe each dimension of data quality in detail.

Quality Parameters for Data

1. Accuracy

The first quality parameter for data is accuracy, or correctness. You have to ensure that the data you hold is right; otherwise, all your efforts will be in vain. To realize the data’s actual value, you must remove poor records, redundancies, missing values, and similar problems. Getting to accurate data is not especially hard, but it does require the right measures.

Organizations that prioritize data accuracy are better placed to make the right decisions, even in tough times, because accurate data supports informed decisions and better business outcomes. It also fosters stakeholders’ trust in the organization and how it works. The aim is to increase database accuracy over time, since datasets keep growing as the organization grows.
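
One common way to put a number on accuracy, so you can track it over time, is to compare a sample of stored records against a source you trust (for example, manually verified customer details) and report the share that match. The sketch below assumes such a verified reference exists; the function `accuracy_rate` and the sample records are hypothetical.

```python
def accuracy_rate(records: dict[str, dict], verified: dict[str, dict]) -> float:
    """Share of verified records whose stored values match the trusted reference.

    Assumes both inputs map a record ID to a dict of field values.
    Only IDs that appear in the verified sample are checked.
    """
    checked = [rid for rid in verified if rid in records]
    if not checked:
        return 0.0
    correct = sum(1 for rid in checked if records[rid] == verified[rid])
    return correct / len(checked)


# Hypothetical data: record "c2" has a mistyped email domain.
records = {
    "c1": {"name": "Alex Jackson", "email": "jack24@gmail.com"},
    "c2": {"name": "Maria Lopez", "email": "maria@exmple.com"},
}
verified = {
    "c1": {"name": "Alex Jackson", "email": "jack24@gmail.com"},
    "c2": {"name": "Maria Lopez", "email": "maria@example.com"},
}
print(f"Accuracy: {accuracy_rate(records, verified):.0%}")  # Accuracy: 50%
```

Re-running the same check on a fresh sample each month gives a simple trend line for whether accuracy is improving as the database grows.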

2. Consistency

Suppose you have been asked to phone customers, ask them a specific question, and collect their feedback. For this, you are given access to the customer data. When you log into the database, the first thing you notice is that the customers’ phone numbers are not stored in a uniform format at all. In other words, the database is inconsistent.

Maintaining data consistency across channels is essential. Consistency can be expressed as the percentage of values that match across the data platform. A business that holds consistent data is better positioned to succeed, because a consistent database produces meaningful outcomes and supports business analytics.
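
To make the phone-number example measurable, you can normalize every number to one canonical form before comparing, then compute the percentage of customers whose number matches across two systems. This is a minimal sketch: the two systems (`crm`, `billing`) are hypothetical, and the digits-only normalization is a simplifying assumption that ignores country codes and extensions.

```python
import re


def normalize_phone(raw: str) -> str:
    """Reduce a phone number to its digits so formatting differences disappear.

    Simplifying assumption: country codes and extensions are not handled.
    """
    return re.sub(r"\D", "", raw)


def consistency_rate(source_a: dict[str, str], source_b: dict[str, str]) -> float:
    """Percentage of shared customer IDs whose normalized phone numbers match."""
    shared = source_a.keys() & source_b.keys()
    if not shared:
        return 0.0
    matches = sum(
        1 for cid in shared
        if normalize_phone(source_a[cid]) == normalize_phone(source_b[cid])
    )
    return 100 * matches / len(shared)


# Hypothetical systems storing the same numbers in different formats.
crm = {"c1": "(555) 123-4567", "c2": "555.987.6543", "c3": "555-000-1111"}
billing = {"c1": "555-123-4567", "c2": "5559876543", "c3": "555-000-2222"}
print(f"{consistency_rate(crm, billing):.1f}% consistent")  # 66.7% consistent
```

Normalizing first separates two problems: format differences (which normalization fixes) and genuinely conflicting values, like customer `c3`, which need follow-up.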

3. Uniqueness

Each record entered into your database should be unique. When two records capture the same entity, you end up with duplicates, so uniqueness is key to keeping the database intact. Sometimes the values do not match exactly, yet the records still refer to the same thing; in either case, uniqueness is breached. Let’s understand this with the following example:

Suppose you extracted two sets of customer information from two different locations, which look like this:

Data from Location One

Name: Alex Jackson

Address: Jacksonville

Email: jack24@gmail.com

Data from Location Two

Name: Jackson A

Address: Jacksonville

Email: jack24@gmail.com

As you can see, the names recorded at the two locations differ, but the other information is identical: both the address and the email match. The two names probably refer to the same person. This kind of mistake usually happens during data entry, so you have to keep a close eye on records as they are entered. Maintaining the uniqueness of data helps you prevent duplication.
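
Exact duplicates are easy to catch, but the Alex Jackson example shows records whose names differ. A simple heuristic is to group records by a normalized key built from fields that should identify one person and flag any group with more than one record for review. Using email plus address as that key is an illustrative assumption, and `find_possible_duplicates` is a hypothetical helper; real deduplication tools often add fuzzy name matching on top.

```python
from collections import defaultdict


def find_possible_duplicates(records: list[dict]) -> list[list[dict]]:
    """Group records that share the same normalized email and address.

    The matching key is an illustrative assumption; names are ignored
    because they are often entered inconsistently.
    """
    groups = defaultdict(list)
    for rec in records:
        key = (rec["email"].strip().lower(), rec["address"].strip().lower())
        groups[key].append(rec)
    # Only groups with two or more records are potential duplicates.
    return [group for group in groups.values() if len(group) > 1]


records = [
    {"name": "Alex Jackson", "address": "Jacksonville", "email": "jack24@gmail.com"},
    {"name": "Jackson A", "address": "Jacksonville", "email": "jack24@gmail.com"},
]
for group in find_possible_duplicates(records):
    print("Possible duplicate:", [rec["name"] for rec in group])
# Possible duplicate: ['Alex Jackson', 'Jackson A']
```

Flagged groups are candidates for a human to merge, not automatic deletions, since two different people can occasionally share an address.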

4. Completeness

The cost of poor data is huge, yet more than 60% of companies do not calculate it. In most cases, these companies were found to rely on incomplete datasets, which damages their analytics.

Hence, to increase the quality of your data, you have to ensure that your datasets are complete. For example, if you deal with customer data, you have to maintain a complete set of customer information. This includes customers’ first and last names, along with other relevant information.

A complete set of data is essential for accurate insights, and for marketing and promotions, too. Suppose your marketing team could collect only the first names of your target audience. You would then have to send emails without the recipients’ last names, and your email marketing strategy may suffer as a result. Addressing customers by their full names can contribute to marketing success, and that is only possible when you have complete information about your customers.

But names are not the only thing that makes a database complete. A marketing database, for example, should also include emails, addresses, preferences, income levels, and other relevant attributes.
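
Completeness is often reported field by field: for each attribute your purpose requires, what percentage of records actually have a value? The sketch below assumes a hypothetical required-field list and treats `None` and blank strings as missing; both choices would need adjusting to your own schema.

```python
REQUIRED_FIELDS = ["first_name", "last_name", "email", "address"]  # assumed schema


def is_filled(value) -> bool:
    """Treat None and blank strings as missing."""
    return value is not None and str(value).strip() != ""


def completeness_by_field(records: list[dict], fields: list[str]) -> dict[str, float]:
    """Percentage of records with a non-empty value for each required field."""
    total = len(records)
    return {
        field: (100 * sum(is_filled(rec.get(field)) for rec in records) / total if total else 0.0)
        for field in fields
    }


# Hypothetical customers: the second one lacks a last name and an address.
customers = [
    {"first_name": "Alex", "last_name": "Jackson", "email": "jack24@gmail.com", "address": "Jacksonville"},
    {"first_name": "Maria", "last_name": "", "email": "maria@example.com", "address": None},
]
for field, pct in completeness_by_field(customers, REQUIRED_FIELDS).items():
    print(f"{field}: {pct:.0f}% complete")
# first_name: 100% complete
# last_name: 50% complete
# email: 100% complete
# address: 50% complete
```

A per-field report points directly at what to collect next, which is more actionable than a single completeness score for the whole table.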

5. Timeliness

In the digital era, things change every other day. Large numbers of people switch to new email addresses as often as every two months, and the same is happening with phone numbers. People are also relocating across nations all the time. Hence, your marketing database needs a mechanism for constant updates that reflects these changes. As a data quality metric, timeliness measures whether your database is updated on time.
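
A basic timeliness check stores a last-updated date on each record and flags anything older than an agreed freshness window, so it can be queued for re-verification. The `last_updated` field and the 60-day threshold are assumptions for illustration; the right window depends on how quickly each attribute tends to change.

```python
from datetime import date, timedelta

MAX_AGE = timedelta(days=60)  # assumed freshness threshold; tune per field


def stale_records(records: list[dict], today: date, max_age: timedelta = MAX_AGE) -> list[str]:
    """Return IDs of records that have not been updated within max_age of today."""
    return [rec["id"] for rec in records if today - rec["last_updated"] > max_age]


# Hypothetical contact records with their last update dates.
contacts = [
    {"id": "c1", "last_updated": date(2024, 5, 1)},
    {"id": "c2", "last_updated": date(2023, 11, 15)},
]
print(stale_records(contacts, today=date(2024, 6, 1)))  # ['c2']
```

Passing `today` explicitly rather than calling `date.today()` inside the function keeps the check easy to test and to re-run for a past date.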

6. Validity

Does your data fit your targeted range?

The validity of data reflects how well your data fits its intended purpose. To assess it, you need to check whether the values in your database follow logical rules, such as falling within an expected range.

For example, a retail store’s database may have a quantity field that accepts any number from 0 upward, but never a negative number such as -2 or -56. Rules like this show how logical, and therefore how valid, your data points are. The more valid your data, the higher the quality of your outputs.
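
The quantity rule can be expressed as a small validation function that returns every row breaking it. This sketch assumes quantities are stored as whole numbers; `invalid_quantities` and the sample SKUs are hypothetical.

```python
def invalid_quantities(rows: list[dict]) -> list[dict]:
    """Return rows whose quantity is not a non-negative integer."""
    bad = []
    for row in rows:
        qty = row.get("quantity")
        # bool is a subclass of int in Python, so it is excluded explicitly.
        if not isinstance(qty, int) or isinstance(qty, bool) or qty < 0:
            bad.append(row)
    return bad


orders = [
    {"sku": "A-100", "quantity": 3},
    {"sku": "B-200", "quantity": -2},
    {"sku": "C-300", "quantity": 0},
    {"sku": "D-400", "quantity": -56},
]
print([row["sku"] for row in invalid_quantities(orders)])  # ['B-200', 'D-400']
```

Running checks like this at the point of entry, rather than only in periodic audits, stops invalid values from reaching downstream reports.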

7. Integrity

Data integrity is all about connections. It shows how your database is connected or linked to other data. In another sense, data integrity ensures a smooth data journey, helping data move across the platform and reach every data point. In essence, the benefit of an integrated database is that you can trace your data and establish connections between datasets.
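
In database terms, one concrete form of this is referential integrity: every link from one dataset to another should point at a record that actually exists. The sketch below finds "orphaned" orders whose customer ID has no matching customer; the field names `customer_id` and `id` are hypothetical, and relational databases can enforce the same rule with foreign key constraints.

```python
def orphaned_orders(orders: list[dict], customers: list[dict]) -> list[dict]:
    """Return orders whose customer_id has no matching customer record."""
    known_ids = {cust["id"] for cust in customers}
    return [order for order in orders if order["customer_id"] not in known_ids]


customers = [{"id": "c1", "name": "Alex Jackson"}]
orders = [
    {"order_id": "o1", "customer_id": "c1"},
    {"order_id": "o2", "customer_id": "c9"},  # points at a customer that does not exist
]
print([o["order_id"] for o in orphaned_orders(orders, customers)])  # ['o2']
```

Building a set of known IDs first keeps each lookup fast, so the check scales to large order tables.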

Data integrity is one of the core parameters of data quality. An integrated data system also supports business intelligence, reflecting how well an organization understands and uses the data it has acquired. Identifying where the data fits and can be used for improvement is how the business becomes better and more relevant.

Most organizations (almost 70%) have now moved their data storage to cloud-based platforms, which gives them instant access to their databases.

8. Reliability

A dataset that carries true value and provides accurate results when tested across multiple platforms can be called reliable. Having reliable data is essential for making rational decisions. Reliable datasets increase trust and operational efficiency and reduce the risks associated with inaccurate values.

Reliable data should not contradict itself when checked across multiple sources. For example, if a customer’s birthday is recorded as 23 January 1989 in one database but as 23 June 1886 in another, the information is clearly unreliable.
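
A simple reliability test pulls the same attribute from several sources and flags every record where they disagree, as in the birthday example. The sketch assumes each source can be exported as a mapping from a shared customer ID to a value; the source names `crm` and `loyalty` and the function `conflicting_records` are hypothetical.

```python
from datetime import date


def conflicting_records(sources: dict[str, dict[str, date]]) -> dict[str, dict[str, date]]:
    """Find record IDs whose value differs between any of the given sources.

    `sources` maps a source name to {record_id: value}.
    Returns {record_id: {source_name: value}} for every conflicting ID.
    """
    by_id: dict[str, dict[str, date]] = {}
    for source_name, values in sources.items():
        for record_id, value in values.items():
            by_id.setdefault(record_id, {})[source_name] = value
    # A record conflicts when its sources report more than one distinct value.
    return {
        record_id: seen
        for record_id, seen in by_id.items()
        if len(set(seen.values())) > 1
    }


birthdays = {
    "crm": {"c1": date(1989, 1, 23), "c2": date(1975, 7, 4)},
    "loyalty": {"c1": date(1886, 6, 23), "c2": date(1975, 7, 4)},
}
for record_id, seen in conflicting_records(birthdays).items():
    print(record_id, {name: d.isoformat() for name, d in seen.items()})
# c1 {'crm': '1989-01-23', 'loyalty': '1886-06-23'}
```

This only detects disagreement; deciding which value is correct still needs a trusted reference or human review.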

9. Relevance

Check whether the data you collected serves its purpose. If it does, your data is relevant. Data relevance is the quality parameter that ensures data serves a meaningful purpose. Therefore, setting specific goals, objectives, and missions is both necessary and helpful for staying relevant. Most importantly, these should be defined before you collect the data.

For the best business outcomes, collect data that serves a purpose and is aligned with your business goals. Staying relevant with data allows organizations to perform more efficiently, extract valuable insights, and drive relevant business decisions.

10. Auditability

Transparency in the data alteration process is essential to maintain data quality. Auditability is a metric that tracks the percentage of data fields that have been edited. It reveals how much data has been altered, finds data gaps, and helps re-evaluate untraceable or disassociated data. With data auditability, you can check what edits were made, when, and by whom.

It helps organizations to trace the history of data updates strategically.
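
To make the "what, when, and by whom" concrete, here is a toy sketch of an append-only audit trail: every field update is recorded with the old value, new value, editor, and UTC timestamp, and the store can report the percentage of fields that have been edited. `AuditedStore` and `AuditEntry` are hypothetical names; production systems usually rely on database triggers, change data capture, or the storage platform’s own audit logging instead.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    """One immutable record of a single field edit."""
    record_id: str
    field_name: str
    old_value: object
    new_value: object
    edited_by: str
    edited_at: datetime


@dataclass
class AuditedStore:
    """A toy in-memory store that logs every field edit (illustrative only)."""
    records: dict[str, dict[str, object]]
    log: list[AuditEntry] = field(default_factory=list)

    def update(self, record_id: str, field_name: str, new_value: object, user: str) -> None:
        old_value = self.records[record_id].get(field_name)
        self.records[record_id][field_name] = new_value
        # Entries are only ever appended, never modified or removed.
        self.log.append(AuditEntry(record_id, field_name, old_value, new_value,
                                   user, datetime.now(timezone.utc)))

    def history(self, record_id: str) -> list[AuditEntry]:
        return [entry for entry in self.log if entry.record_id == record_id]

    def percent_fields_edited(self) -> float:
        total = sum(len(fields) for fields in self.records.values())
        edited = {(entry.record_id, entry.field_name) for entry in self.log}
        return 100 * len(edited) / total if total else 0.0


store = AuditedStore({"c1": {"name": "Jackson A", "email": "jack24@gmail.com"}})
store.update("c1", "name", "Alex Jackson", user="data.steward")
for entry in store.history("c1"):
    print(entry.edited_by, entry.field_name, entry.old_value, "->", entry.new_value)
# data.steward name Jackson A -> Alex Jackson
print(f"{store.percent_fields_edited():.0f}% of fields edited")  # 50% of fields edited
```

Keeping the old value in each entry is what makes the history traceable: any field can be reconstructed to its state at an earlier point in time.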

11. Currency

Beyond accuracy and relevance, there is something bigger at play in and around the database: the currency of the data. Currency reflects how recently the data was collected and updated, and it is high when the data is up to date.

A current (accurately updated) dataset is considered good-quality data. Regularly updating old records and maintaining the other quality parameters helps organizations increase data currency. Keeping the database up to date also helps organizations adapt to a rapidly changing business environment and carve out a distinct place in the market.