Training AI models from scratch is time-consuming, isn’t it?

Adapting AI models to the nature of your work takes time and effort. What if you could teach a model a new task without spending that time on training? Yes, it is possible when you consider in-context learning.

This approach lets you bypass the fine-tuning process and start working with your AI models directly, without any additional training. EASY!

Well, in-context learning (ICL) is like handing your AI model a cheat sheet and asking it to follow it diligently. It differs from a “one-size-fits-all” training curriculum and from traditional prompting methods: the model picks up the task from the examples in the prompt, with no extra training effort on your part.

In this blog, let’s take a detailed look at what ICL is and how it works. We’ll also go through some real-life examples to understand the concept better.

What is in-context learning all about?

ICL, or In-Context Learning, is a prompt engineering method in which task demonstrations are provided to the AI model as part of the prompt. The model adapts to the task without any fine-tuning.

In detail, in-context learning is a relatively new learning paradigm in which the model is treated as a black box and fed ordinary text. The input describes the new task, usually with a few examples, and the model produces its output by following them. In-context learning is possible in autoregressive language models such as GPT-3 and other models in the GPT family.

Unlike supervised training, ICL does not update any of the model’s parameters. Predictions come from the pre-trained language model as it is. That’s why it does not require fine-tuning.

Interestingly, in-context learning (ICL) is also known as few-shot prompting. The method is powerful because it draws on what the LLM learned during pre-training. Because of this, the model can comprehend and carry out a wide range of tasks, and it fits easily into a machine learning architecture.

How in-context learning works in LLMs

Learning from analogy is the key idea behind in-context learning. The model generalizes from just a few examples, sometimes a single one. In an LLM, the in-context learning technique uses the prompt to describe the task to the model. Basically, a handful of input-output examples gives the model the guidance it needs, with no training step.
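To make the idea concrete, here is a minimal sketch of how such a prompt could be assembled. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are assumptions chosen for illustration, not a fixed standard; any consistent layout should serve the same purpose.

```python
def build_few_shot_prompt(instruction, demonstrations, query):
    """Assemble an instruction, worked examples, and a new input into one prompt."""
    lines = [instruction, ""]
    for example_input, example_output in demonstrations:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # The final block leaves the output empty so the model completes it by analogy.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical demonstrations for a pluralization task.
demonstrations = [
    ("cat", "cats"),
    ("box", "boxes"),
    ("city", "cities"),
]
prompt = build_few_shot_prompt("Write the plural of each noun.", demonstrations, "baby")
print(prompt)
```

The resulting string is all the model receives. Nothing about the model itself changes; the examples simply sit in front of the new input.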

Now, think of any traditional machine learning model. Okay, let’s take the example of linear regression. This model needs a good amount of training data and a separate training process. Accurately labeled training data is crucial for the development of a good AI system, which is why skilled data annotators matter so much to the success of AI/ML models. In-context techniques require none of that: no labeled dataset beyond the few examples in the prompt, and no separate training run.

ICL draws its power from the knowledge the model acquired during pre-training. This allows the model to handle complex tasks with minimal input.
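The contrast with linear regression can be sketched in a few lines. In this toy example (the data points and the `x = ... -> y = ...` prompt layout are made up for illustration), the traditional route has to estimate a slope and an intercept before it can predict anything, while the in-context route only writes the same pairs into a prompt.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (the training step)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # toy data generated by y = 2x + 1

# Traditional route: parameters are estimated from data before any prediction.
slope, intercept = fit_line(xs, ys)
prediction = slope * 5.0 + intercept

# In-context route: the same pairs are written into the prompt, nothing is fitted.
icl_prompt = "\n".join(f"x = {x} -> y = {y}" for x, y in zip(xs, ys)) + "\nx = 5.0 -> y ="
```

Whether a given LLM continues the prompt with the right number depends on the model; the point here is only that the second route has no fitting step at all.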

Approaches to ICL

In-context learning can be put to work in various ways. In sum, you can take the following approaches:

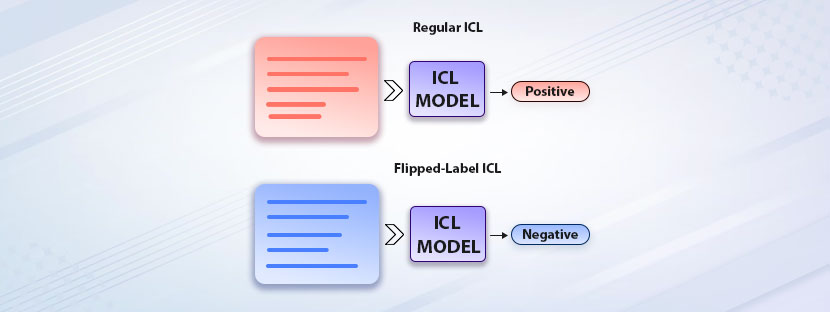

Sentiment Analysis

With a few examples in the prompt, LLMs can assess the sentiment of written text and decide whether it is positive or negative. No explicit training is involved: the examples in the prompt are all the guidance the model gets. This way, a sentiment analysis model can support customer feedback analysis, social media monitoring, market research, and more.
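A sentiment setup along these lines might look like the sketch below. The example reviews are invented, and `complete` is a stand-in for whatever function sends a prompt to your model and returns its text reply; it is an assumption, not a real library call.

```python
SENTIMENT_EXAMPLES = [
    ("The delivery was fast and the product works great.", "positive"),
    ("The app keeps crashing and support never replied.", "negative"),
]


def sentiment_prompt(text):
    """Build a few-shot prompt that asks for a one-word sentiment label."""
    parts = ["Classify the sentiment of each review as positive or negative.", ""]
    for review, label in SENTIMENT_EXAMPLES:
        parts += [f"Review: {review}", f"Sentiment: {label}", ""]
    parts += [f"Review: {text}", "Sentiment:"]
    return "\n".join(parts)


def parse_label(completion):
    """Map the model's free-text reply onto one of the allowed labels, else None."""
    words = completion.strip().lower().split()
    first_word = words[0].strip(".,!") if words else ""
    return first_word if first_word in ("positive", "negative") else None


def classify_sentiment(text, complete):
    """`complete` is any caller-supplied function that maps a prompt to a completion."""
    return parse_label(complete(sentiment_prompt(text)))
```

Parsing the reply is worth the extra few lines, since a model may answer with different capitalization, punctuation, or extra words around the label.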

Language Translation

Again, with a few input-output examples, an LLM can perform language translation. This helps bridge communication gaps, especially for global businesses. ICL-style prompts let LLMs start translating right away.
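For chat-style models, the translation pairs can also be expressed as a short conversation instead of one long string. The sketch below assumes the common convention of `system`, `user`, and `assistant` roles; the exact message format your provider expects may differ, and the function name and sample sentences are illustrative only.

```python
def translation_messages(pairs, source_text, source_lang="English", target_lang="French"):
    """Express translation demonstrations as alternating user/assistant turns."""
    messages = [{
        "role": "system",
        "content": f"Translate each {source_lang} message into {target_lang}.",
    }]
    for source, target in pairs:
        messages.append({"role": "user", "content": source})
        messages.append({"role": "assistant", "content": target})
    # The last user turn is the new text; the model's reply is the translation.
    messages.append({"role": "user", "content": source_text})
    return messages


pairs = [
    ("Good morning.", "Bonjour."),
    ("Thank you very much.", "Merci beaucoup."),
]
messages = translation_messages(pairs, "Where is the train station?")
```

Each demonstration pair plays the role of an earlier exchange, so the model sees the new sentence as one more turn in a pattern it has already followed.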

Code Generation

By feeding in a few sample coding problems and their solutions, you can get an LLM to generate new code. It can handle coding up to a certain level and help with software development. With such a model integrated, the time and effort spent on manual coding can be reduced.
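As a rough sketch, problem and solution pairs can be laid out as commented code, with the new problem left open at the end. The sample problems and helper names here are assumptions for illustration. Because generated code only goes "up to a certain level", the sketch also includes a basic check, using Python's standard `ast` module, that the model's reply at least parses.

```python
import ast

CODE_EXAMPLES = [
    ("Return the square of n.", "def square(n):\n    return n * n"),
    ("Return True if n is even.", "def is_even(n):\n    return n % 2 == 0"),
]


def code_prompt(task):
    """Build a few-shot prompt of problem/solution pairs ending with the new task."""
    blocks = [f"# Problem: {problem}\n{solution}\n" for problem, solution in CODE_EXAMPLES]
    blocks.append(f"# Problem: {task}\n")
    return "\n".join(blocks)


def is_valid_python(source):
    """Return True if the generated text at least parses as Python."""
    try:
        ast.parse(source)
    except SyntaxError:
        return False
    return True
```

Note that `is_valid_python` is a syntax check only. It says nothing about whether the generated function is correct, so tests and review are still needed before the code is used.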

Custom ML Models

Some machine learning models require elaborate training, and some require only a little. With in-context learning, customization is also possible. Rather than spending heavily on training resources, you can use ICL to handle custom data needs, and it adapts to changes quickly.

Medical Diagnostics

ICL also helps develop medical diagnostics AI models that assist doctors in delivering timely care to patients. By providing the LLM with some medical symptoms and their corresponding diagnoses, ICL prepares the model to suggest diagnoses the way a medical professional would.

Limitations of ICL

As we all know, no single machine learning technique can cover everything. Thus, it’s natural that ICL (In-Context Learning) comes with a few limitations, too. ICL works best when the scale of operation is limited. A stronger technique, such as fine-tuning, might be needed if the LLM operation is large. Apart from that, the technique gives optimal results only in a limited set of domains.