Posting inappropriate visuals on social media deserves harsh punishment. But it is even more important to moderate images before they are published, and automatic image moderation tools make that process faster.

As the number of internet users grows, the role of image moderation is changing. Businesses now need quick moderation instead of waiting a few hours to publish a single photo. AI-powered automatic image moderation tools can also moderate images quickly and in bulk.

This article explains in detail how moderation tools help businesses moderate images faster, along with related matters.

What Is Image Moderation All About?

Image moderation is not just about inappropriate images; the process also removes low-quality images. At present, computer vision models are doing a great job of removing unsolicited content from social media and other platforms.

Social media platforms and other places where people post content have moderation guidelines. These guidelines help maintain community safety and legal compliance. Content or image moderation is important for the following reasons:

Safety of the Users: Moderation acts as a shield that protects users from explicit content.

Brand Integrity: Many top global brands deploy automatic image moderation tools in their social spaces, especially in comment sections. This helps them keep unsolicited content out of their space and maintain their brand reputation.

Legal Compliance: Hosting explicit images on a social platform violates community guidelines, so image moderation is extremely important here.

Methods to moderate image content

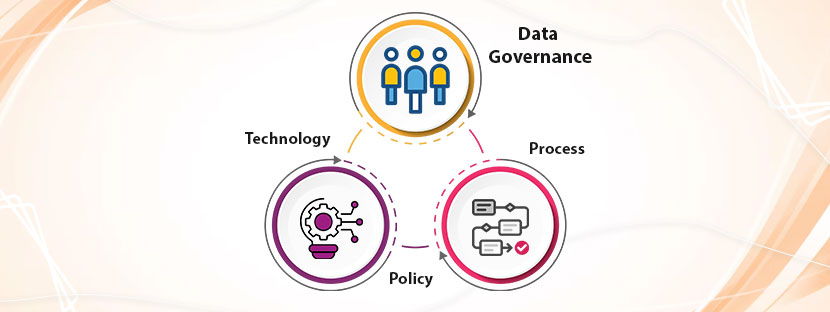

Image moderation is a technical task, not just a matter of selecting images. In general, there are two methods to moderate image content, and a combination of the two is always required.

Manual Moderation

This is the older method: human moderators decide which images to accept and which to reject. They follow standard practices to moderate images. Only skilled moderators can perform moderation tasks, and the required skills depend on the platform where moderation is happening.

Manual image moderation follows the guidelines laid down by community standards. Standard privacy laws also guide human moderators in their work.

Automated Moderation

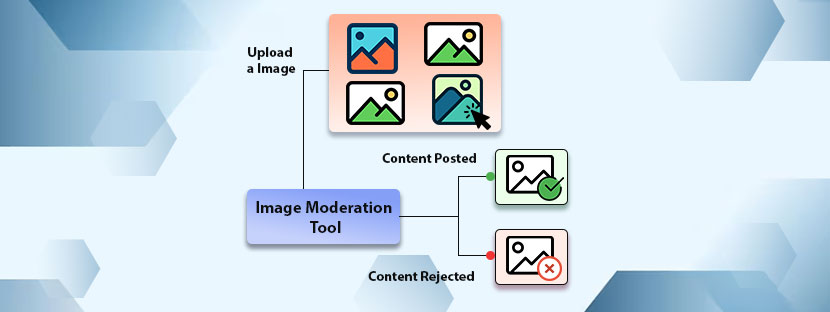

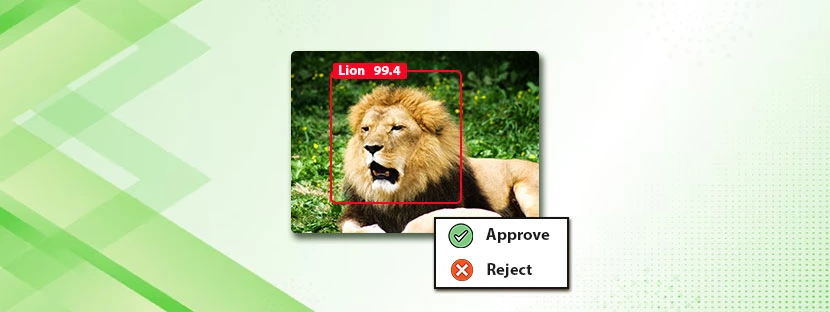

Using automatic image moderation tools is quite common nowadays. Most big companies use them to moderate their comment sections. In this method, an AI model, typically accessed through an API (Application Programming Interface), performs the moderation tasks. The model behind the API has been trained on image moderation data so that it can do this work.

Automated image moderation acts in the following way to filter out image content:
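As a minimal sketch of how such a filter could work, assume the moderation model returns a confidence score between 0.0 and 1.0 for each unwanted category. The category names, the two thresholds, and the `decide` function below are all hypothetical and chosen for illustration; they are not taken from any particular moderation API.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values depend on the platform's policy.
REJECT_THRESHOLD = 0.90   # at or above this score, block the image outright
REVIEW_THRESHOLD = 0.50   # at or above this score, ask a human moderator


@dataclass
class ModerationResult:
    label: str      # category with the highest score, e.g. "explicit"
    score: float    # that category's score
    decision: str   # "approve", "review", or "reject"


def decide(scores: dict[str, float]) -> ModerationResult:
    """Turn per-category scores from a classifier into a moderation decision."""
    if not scores:
        # No scores came back: fail safe by sending the image to a human.
        return ModerationResult(label="none", score=0.0, decision="review")

    # Only the most likely unwanted category drives the decision.
    label, score = max(scores.items(), key=lambda item: item[1])

    if score >= REJECT_THRESHOLD:
        decision = "reject"
    elif score >= REVIEW_THRESHOLD:
        decision = "review"
    else:
        decision = "approve"
    return ModerationResult(label=label, score=score, decision=decision)
```

The middle "review" band is one way to combine the two methods described earlier: clear cases are handled automatically, while uncertain ones go to human moderators.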

Reasons your business must try automatic image moderation tools

Artificial intelligence is booming across all spheres of technical operations, and it makes processes more powerful by automating them. A decade ago, image moderation was a matter of manual labor. Now, AI-based software can automate most moderation tasks. If your business has not yet tried automated image moderation technology, it’s high time to do so.

Here are the main reasons why every business must adopt automatic image moderation tools to perform moderation tasks.

Moderating High-Quality Images

Moderation does not only mean rejecting explicit content. It also means rejecting low-quality images, which automatic image moderation tools can do as well. They can automatically pull down low-quality images that degrade the brand’s reputation. Automatic tools also respond much faster than manual intervention.

Therefore, by incorporating automated photo moderation tools, businesses can maintain quality standards for the images published across all their platforms. With strong API integration, these tools can also correct image quality instantly.
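To make the idea of a quality check concrete, here is a rough sketch of a quality gate. It assumes the image is already available as a grid of grayscale pixel values (0 to 255), and it uses two simple, hypothetical criteria: a minimum resolution and a crude sharpness score. Real tools are likely to use more sophisticated measures, and the default thresholds here are placeholders.

```python
from statistics import pvariance


def sharpness(gray: list[list[int]]) -> float:
    """Variance of differences between horizontally adjacent pixels.

    Flat or heavily blurred images have small, uniform differences and
    therefore score low; images with crisp edges score high.
    """
    diffs = [row[x + 1] - row[x] for row in gray for x in range(len(row) - 1)]
    return float(pvariance(diffs)) if diffs else 0.0


def passes_quality_gate(
    gray: list[list[int]],
    min_width: int = 640,          # hypothetical minimum resolution
    min_height: int = 480,
    min_sharpness: float = 50.0,   # hypothetical sharpness threshold
) -> bool:
    """Return True if the image is large enough and sharp enough to publish."""
    height = len(gray)
    width = len(gray[0]) if gray else 0
    if width < min_width or height < min_height:
        return False
    return sharpness(gray) >= min_sharpness
```

An image that fails the gate could be rejected automatically or sent back to the uploader with a request for a better version.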

To Showcase Clean Content Only

For a brand, it is very important to publish content that is child-friendly. Otherwise, it will face opposition from its audience. Some automatic image moderation tools come with a built-in option that ensures only clean content is published. Clean content means simple content that can be shown in any space without a disclaimer.

Again, with good image moderation practices, businesses can generate and publish clean content for their audience. Automated tools help ensure that only the best version of the content is published.

Verifying Image Content Instantly

The best thing about automatic image moderation tools is that they can moderate images in real time, which helps make the internet safer. Unlike other moderation techniques, these tools can moderate any type of image almost instantly. You don’t have to wait long for your images to be moderated and published.

In most cases, manual moderation can take up to 48 hours, whereas AI-powered image moderation tools return a decision almost immediately. One more thing: you can integrate these tools into your existing platform for seamless, real-time verification of images. If you’re hosting a platform for your audience, this can lead to more customer engagement on your platform.

Best Practices of Image Moderation using automatic image moderation tools

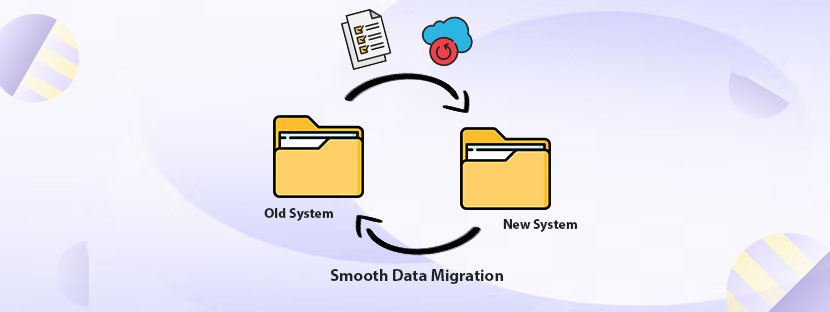

Following moderation policies is extremely important when it comes to moderating images. Each platform has different rules regarding moderation, so a single tool might not work for every platform. You need to mix and match strategies to get the best out of the framework. Here, manual moderation combined with automatic image moderation tools works best.

You can deploy the best practices of moderation if you combine both methods. Human moderators can regularly train your AI tools to recognize new types of inappropriate image content. With AI-powered moderation tools, human moderators can also report in real time. This combination also supports accurate moderation auditing and timely feedback.
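One possible way to support this feedback and auditing loop is to log every case where a human moderator reviews an automated decision. The sketch below is illustrative only: `ReviewRecord` and `FeedbackLog` are hypothetical names, and the decisions are plain strings such as "approve" or "reject".

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReviewRecord:
    image_id: str
    model_decision: str   # what the automated tool decided
    human_decision: str   # what the human moderator decided


@dataclass
class FeedbackLog:
    records: List[ReviewRecord] = field(default_factory=list)

    def add(self, image_id: str, model_decision: str, human_decision: str) -> None:
        """Record one human review of an automated decision."""
        self.records.append(ReviewRecord(image_id, model_decision, human_decision))

    def disagreements(self) -> List[ReviewRecord]:
        """Cases where the human overruled the tool; candidates for retraining."""
        return [r for r in self.records if r.model_decision != r.human_decision]

    def agreement_rate(self) -> Optional[float]:
        """Share of reviewed images where tool and human agreed (None if empty)."""
        if not self.records:
            return None
        agreed = len(self.records) - len(self.disagreements())
        return agreed / len(self.records)
```

The disagreements can serve as new training examples for the AI tool, while the agreement rate gives a simple figure to track during moderation audits.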