
After working through countless processes and spending heavily, most organizations conclude that having a single source of truth is important.

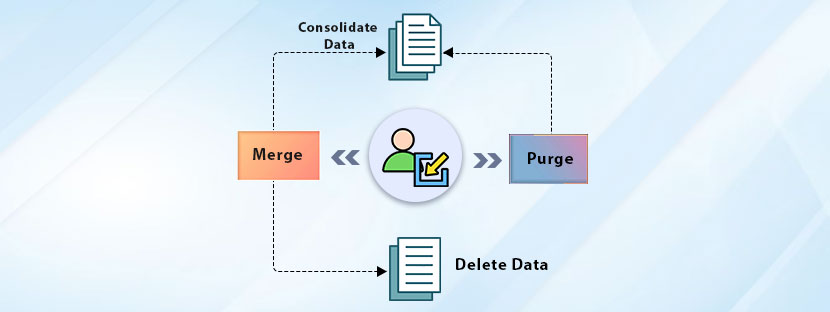

If only they had realized it sooner. Merge purge is the process that helps organizations maintain a consolidated “truth” database.

“The future of your business depends on the integrity of your data.”

If your data is not telling the truth, then your business is in trouble. You need to take immediate measures to restore the truthfulness of your data. Merging and purging are the processes to consider for maintaining data integrity.

In the following sections, we’ll explore the key steps you need to take to make the right decisions when merging or purging your data.

Let’s dive in!

The true meaning of “merging & purging” of data and its importance

To drive your business forward with confidence and clarity, you need data integrity.

Merging simply means bringing together all the information spread across your systems. It creates a unified set of data records. Merging must be done carefully; otherwise, it will create issues.

When merging databases, one thing that must be ensured is that you don’t lose any crucial details. Avoid creating conflicts between records as much as possible.

How do you know you have merged your data well?

When data is merged correctly, it provides a complete and accurate picture that lets you make informed decisions about your business. If the merge is done incorrectly, it leads to errors, confusion, and mistrust in your database.

Purging, on the other hand, is the step that follows merging. Merging focuses on bringing all data together, while purging ensures that no duplicate records remain in the database. Besides duplicates, purging removes outdated and irrelevant information that weakens your data.

Merging and purging run hand in hand. By applying both, you can ensure that your database holds the most accurate, relevant, and valuable data, and you raise the quality and reliability of that data.

Merging becomes important when…

Do you want to maintain complete accuracy and consistency in your data records?

If you say “yes”, you need to consider applying the merging process. Merging isn’t just an option; it’s a necessity when it comes to maintaining the ‘truth’ in your data.

Here are a few trigger points for merging your datasets. Treat them as symptoms: if you face any of them, do not delay. Remember one thing: your data should tell the right story.

You’ve encountered duplicate records

When the same record appears multiple times in a database, it creates a mess. Keeping your files as duplicate-free as possible is the mantra. If you have encountered duplicate records in multiple locations, consider merging your databases immediately.

When you merge your databases, all your records appear in one location, so you can easily spot entities that have been entered twice. After that, you just need to pick out the duplicate records and remove them from your database. That’s it.
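As a minimal sketch of that idea, the snippet below brings two record lists into one place and groups them by a shared key to surface entries that appear more than once. The system names (`crm`, `billing`), the field names, and the `find_duplicates` helper are all hypothetical, and the sketch assumes the key values are already formatted consistently.

```python
from collections import defaultdict

# Hypothetical records from two systems; names and fields are assumptions.
crm = [
    {"name": "Ana Silva", "email": "ana@example.com"},
    {"name": "Ben Okoye", "email": "ben@example.com"},
]
billing = [
    {"name": "Ana Silva", "email": "ana@example.com"},
    {"name": "Chloe Tan", "email": "chloe@example.com"},
]


def find_duplicates(records, key):
    """Group records by `key` and return only groups with more than one entry."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record)
    return {value: group for value, group in groups.items() if len(group) > 1}


merged = crm + billing  # bring everything into one location
duplicates = find_duplicates(merged, "email")
```

Here `duplicates` holds one group, keyed by the shared email, which you can then review before removing the extra copy.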

Some records are incomplete

Your organization may have all the necessary information, but not in a compiled format. Finding the exact information you need at a given moment then wastes time unnecessarily. Ever faced that situation?

When all your databases are integrated, you can easily find any information you need. For example, if one system holds a customer’s email address and another holds their phone number, merging gives you both in a single record.

Your database is inconsistent

Data flowing across your systems should remain consistent. If that flow breaks, it creates mistakes and misunderstandings within the process. How can you tell that your data is inconsistent? A simple check is whether you can find your records easily. If not, consider merging your databases to make the data consistent.

Having a uniform database reduces confusion and improves the accuracy of your datasets.

Confusion regarding historical data

Maintaining the integrity of historical records is crucial. It lets you perform trend analysis and meet compliance requirements more accurately. Sometimes legal mandates apply to how historical data is handled. So, it’s always better to keep your historical data integrated with your existing database.

Merging your historical data with the existing system is often the best thing you can do. It also protects you from a legal and compliance standpoint.

Data purging gets prioritized when…

Do you know what “purge” means in literal terms?

It simply means “delete the records”. Sometimes deleting records helps you maintain a relevant, accurate, and compliant database. Therefore, when the quality and efficiency of your operation are at stake, you need to initiate the purging process as soon as possible.

Below, we point out when purging becomes highly important for your organization.

i. Remove obsolete data

Data tends to lose its value after a certain date. After that point, it becomes obsolete. You can often detect obsolete data without even checking an expiry date. For example, old transactions, discontinued products, and irrelevant customer interactions are no longer of use. These make up obsolete data.

Keeping obsolete data makes systems run slowly. Storage is expensive and limited, and organizations operate within that limit. If you keep adding obsolete data on top, you risk running out of room for the data that matters.

Thus, purge obsolete data regularly to keep your database clean. It frees up space in your existing database and saves you from unnecessary costs.
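One simple way to flag obsolete records is a retention cutoff. The sketch below is illustrative only: the `last_activity` field, the three-year retention period, and the `split_by_retention` helper are assumptions, and your own retention rules may differ.

```python
from datetime import date, timedelta


def split_by_retention(records, today, retention_days):
    """Split records into (keep, purge) based on their last activity date."""
    cutoff = today - timedelta(days=retention_days)
    keep = [r for r in records if r["last_activity"] >= cutoff]
    purge = [r for r in records if r["last_activity"] < cutoff]
    return keep, purge


# Hypothetical transactions; the field name is an assumption.
transactions = [
    {"id": 1, "last_activity": date(2019, 3, 1)},
    {"id": 2, "last_activity": date(2024, 11, 20)},
]

keep, purge = split_by_retention(
    transactions, today=date(2025, 1, 1), retention_days=365 * 3
)
```

Returning the purge candidates instead of deleting them immediately leaves room for a review or an archive step before anything is removed.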

ii. Ensure the highest data privacy

Data privacy is mandatory in today’s regulatory environment. You must comply with data privacy laws carefully to keep your data private.

For reference, Art. 17 of the GDPR establishes the right to erasure: individuals can ask an organization to delete their personal data in certain circumstances. The GDPR’s storage limitation principle also says that personal data should not be kept longer than necessary for its purpose. Organizations that fail to comply risk heavy fines. Therefore, you need to consider a data purge if your organization works with sensitive customer data.

Purging data in line with rules and regulations is important for organizations to function properly. Timely purging reduces legal risks, and it protects your organization’s reputation as well.

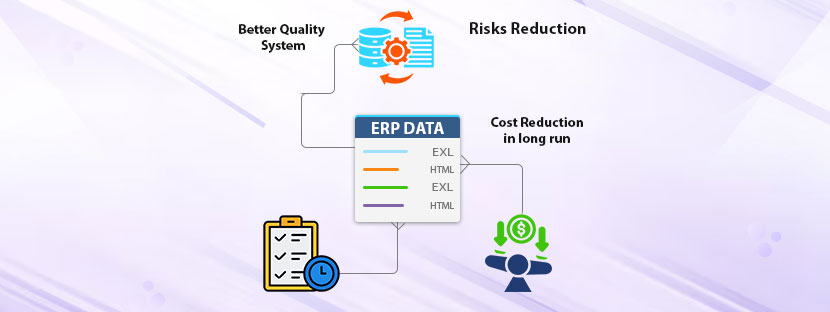

iii. Improve data quality

Data comes in different levels of quality. Feeding poor-quality data into your analytics can skew the results. If you want to extract the best results from your data, you need accurate values as input. Ensure that your data is free of inaccurate, unreliable, or otherwise impure records. Purging removes all of these from your data.

When you remove bad data, your insights rest on accurate and trustworthy information, which is critical for making informed decisions. Purging is a regular process; do not treat it as a one-time exercise.

Now imagine you’re managing a database that contains records for a product that you stopped selling last year. Naturally, that product’s data has no relevance to your organization today. The sensible option is to delete it from your system. Keeping this data takes up space unnecessarily and weakens your analysis. On top of that, you have to pay a good amount to keep the database active. Purging removes this data without harming your existing processes.

Basically, purging is a clear-cut process to keep your data sharp and effective and prevent your organization from making costly mistakes.

Key elements to check before merging and purging

⧐ Source Credibility

Before any merging or purging begins, the first responsibility is to ensure that every dataset you’re working with comes from a trustworthy source. Data that has been collected inconsistently or maintained poorly can distort the entire outcome of a merge.

Assessing credibility means confirming how the data was captured, how often it is updated, and how it has been stored. Merging unreliable sources does not just create inaccuracies; it amplifies them. When the foundation itself is questionable, no amount of cleaning later can fully correct the flaws that were never addressed at the start.

⧐ Structural Compatibility

Even if the data is credible, structural inconsistencies can still ruin a merge. Different teams, tools, or time periods may use different naming conventions, data types, and formatting rules.

One dataset may list “Customer_Name,” another “Name,” and a third may separate first and last names completely. If these structures are not aligned before merging, records get mismatched, fields map incorrectly, and the final dataset becomes unreliable. Ensuring structural alignment in advance gives the merge a friction-free path and prevents errors that stem purely from format differences rather than actual data issues.
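A minimal sketch of that alignment step might map each naming variant onto one common field before any merge happens. The target field `full_name`, the `First_Name` and `Last_Name` column names, and the `to_common_schema` helper are assumptions made for illustration.

```python
# Source column names that all describe a person's name.
# "First_Name" and "Last_Name" are assumed names for the split variant.
NAME_FIELDS = ("Customer_Name", "Name", "First_Name", "Last_Name")


def to_common_schema(record):
    """Return a copy of `record` that stores the name in a single `full_name` field."""
    if "Customer_Name" in record:
        raw = record["Customer_Name"]
    elif "Name" in record:
        raw = record["Name"]
    else:
        raw = f'{record.get("First_Name", "")} {record.get("Last_Name", "")}'
    # Carry over every field that is not one of the name variants.
    rest = {k: v for k, v in record.items() if k not in NAME_FIELDS}
    # Collapse repeated whitespace so "Ana  Silva" and "Ana Silva" align.
    return {"full_name": " ".join(raw.split()), **rest}


aligned = [
    to_common_schema({"Customer_Name": "Ana Silva"}),
    to_common_schema({"Name": "Ana  Silva"}),
    to_common_schema({"First_Name": "Ana", "Last_Name": "Silva", "city": "Porto"}),
]
```

After this step all three records expose the same `full_name` value, so a later match on that field compares like with like.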

⧐ Identifier Standardisation

Identifiers are the anchor points that connect records to the correct person, product, or entity. When their formatting varies, matching becomes inaccurate. One file may use lowercase emails, another uppercase; one may include a country code in phone numbers, another may omit it; one may abbreviate product SKUs, another may not.

Without standardisation, your deduplication system may treat identical individuals as separate entries. Ensuring consistency in identifiers allows the merge to accurately recognise true duplicates rather than superficial differences caused by formatting.
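The following sketch shows one way to standardise two of the identifiers mentioned above. The default country code `1` and the ten-digit national number length are assumptions chosen for the example; real phone data usually needs locale-aware handling.

```python
import re


def normalize_email(email):
    """Trim surrounding whitespace and lowercase the address."""
    return email.strip().lower()


def normalize_phone(phone, default_country_code="1", national_length=10):
    """Keep digits only and prepend a country code when it appears to be missing."""
    digits = re.sub(r"\D", "", phone)
    if len(digits) == national_length:
        digits = default_country_code + digits
    return "+" + digits


same_email = normalize_email("  Ana@Example.COM ") == normalize_email("ana@example.com")
same_phone = normalize_phone("(555) 010-2000") == normalize_phone("+1 555-010-2000")
```

Both comparisons evaluate to `True`, so records that differ only in formatting can now be recognised as the same identifier.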

⧐ Accuracy and Completeness

A merge is only as strong as the accuracy of the data feeding into it. Missing values, invalid entries, and outdated information silently damage the quality of the merged result. If you merge first and clean later, you risk locking in flaws that would have been easier to resolve at the start. Pre-checking accuracy helps expose unusual patterns, inconsistent fields, and missing data that could influence analytics, reporting, or customer decisions. This step acts as a filter that protects the larger merging exercise from inheriting avoidable defects.

⧐ Deduplication Logic

Effective purging depends on clear rules that define when two records truly represent the same entity. Without these rules, you either delete legitimate entries or keep unnecessary duplicates. This is why deduplication must be rule-based, consistent, and carefully executed.
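To make “clear rules” concrete, here is a small sketch with two example matching rules. The rules themselves (same email, or same name plus same phone) and the field names are assumptions; the sketch also assumes the values have already been standardised.

```python
def is_same_entity(a, b):
    """Return True when two records match under either example rule.

    Rule 1: both records share the same non-empty email.
    Rule 2: both records share the same non-empty name and phone.
    """
    if a.get("email") and a.get("email") == b.get("email"):
        return True
    same_name = a.get("name") and a.get("name") == b.get("name")
    same_phone = a.get("phone") and a.get("phone") == b.get("phone")
    return bool(same_name and same_phone)


first = {"name": "Ana Silva", "email": "ana@example.com", "phone": ""}
second = {"name": "A. Silva", "email": "ana@example.com", "phone": "+15550102000"}
third = {"name": "Ana Silva", "email": "", "phone": ""}

match = is_same_entity(first, second)     # matches on email
no_match = is_same_entity(first, third)   # same name alone is not enough
```

Requiring non-empty values keeps two records with blank emails from being treated as duplicates of each other.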

⧐ Conflict Resolution Rules

Once duplicates are identified, you must decide how to handle conflicting information. For example, one version of a record may have a newer address, another may have an older but more complete profile. Establishing which source is more authoritative prevents inconsistencies in the final dataset.

Whether the newest timestamp wins or one data provider is prioritised depends on your operational needs. Without predefined conflict rules, the merged dataset becomes a patchwork of contradictions rather than a harmonised, reliable output.
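The two strategies mentioned here can be sketched as follows. The source names, the priority ranking in `SOURCE_PRIORITY`, and the candidate structure are hypothetical and only serve the illustration.

```python
from datetime import date

# Lower number means more authoritative. This ranking is an assumption.
SOURCE_PRIORITY = {"crm": 0, "web_form": 1}


def resolve_newest(candidates):
    """Newest timestamp wins."""
    return max(candidates, key=lambda c: c["updated"])["value"]


def resolve_by_source(candidates):
    """The most authoritative source wins."""
    return min(candidates, key=lambda c: SOURCE_PRIORITY[c["source"]])["value"]


# Two conflicting addresses for the same entity.
candidates = [
    {"value": "12 Old Road", "updated": date(2021, 5, 1), "source": "crm"},
    {"value": "7 New Street", "updated": date(2024, 2, 10), "source": "web_form"},
]

by_time = resolve_newest(candidates)
by_source = resolve_by_source(candidates)
```

The two rules pick different addresses for the same input, which is exactly why the rule needs to be agreed on before the merge runs.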

⧐ Validation After Merging

Even after a careful merge, validation is essential. Automated tools often miss subtle inconsistencies, so manual sampling, count comparisons, and field-level checks ensure accuracy. This is the phase where you confirm that the dataset hasn’t lost important fields, that the number of records aligns with expectations, and that the deduplication logic worked correctly.

A well-validated merge protects downstream processes like reporting, segmentation, and forecasting from hidden data flaws.
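As a rough sketch, the count comparison and field-level checks could look like this. The `validate_merge` helper, its parameters, and the sample records are assumptions; in practice you would pair such checks with manual sampling.

```python
def validate_merge(sources, merged, duplicates_removed, required_fields):
    """Return a list of human-readable problems found in the merged data."""
    problems = []
    # Count comparison: inputs minus removed duplicates should equal the output.
    expected = sum(len(source) for source in sources) - duplicates_removed
    if len(merged) != expected:
        problems.append(f"expected {expected} records, found {len(merged)}")
    # Field-level check: every record should carry the required fields.
    for index, record in enumerate(merged):
        missing = [field for field in required_fields if not record.get(field)]
        if missing:
            problems.append(f"record {index} is missing {missing}")
    return problems


source_a = [{"name": "Ana", "email": "ana@example.com"}, {"name": "Ben", "email": ""}]
source_b = [{"name": "Ana", "email": "ana@example.com"}]
merged = [{"name": "Ana", "email": "ana@example.com"}, {"name": "Ben", "email": ""}]

problems = validate_merge(
    [source_a, source_b], merged, duplicates_removed=1, required_fields=["name", "email"]
)
```

An empty list means the checks passed; here the list contains one entry because the second record has no email.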

⧐ Documentation and Governance

↪ US companies lose up to 40% of operational efficiency due to poor documentation, according to industry process-management studies.

↪ Teams without written data procedures experience 30–40% higher rework time, as reported by multiple workflow audits.

↪ Organisations with formal data governance reduce data-quality issues by nearly 30%, based on findings shared in enterprise analytics reports.

Documenting your merging rules, transformation steps, and conflict-resolution decisions creates transparency, ensures repeatability, and strengthens long-term data governance.

How to do data merging without any mistakes

Merging is a methodical process of combining different datasets into one platform. Take each step with care so that no errors creep in along the way. The steps below walk through how to merge your databases without mistakes.

◈ Start with data profiling

Data merging starts with data profiling. Profiling examines the structure and content of your database so that you understand what it actually contains before you merge. It helps you discover issues such as inconsistencies, inaccuracies, and missing values.

Because data profiling analyzes data at multiple levels, it gives you the clearest picture of the entire dataset. It exposes individual columns and their relationships with each other. Above all, data profiling makes the entire database transparent, so you can merge it with another database more easily.

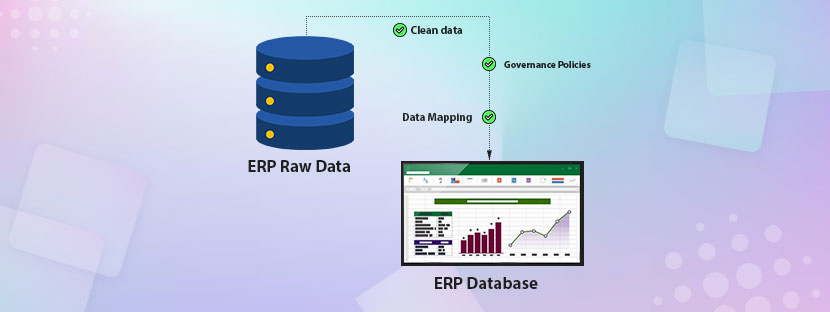
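A very small profiling pass can already reveal missing values and how varied each column is. The sketch below assumes records are plain dictionaries; the `profile` helper and the sample data are hypothetical.

```python
def profile(records):
    """Report missing and distinct value counts for every column seen."""
    columns = sorted({key for record in records for key in record})
    report = {}
    for column in columns:
        values = [record.get(column) for record in records]
        # Treat absent keys, None, and empty strings as missing.
        present = [value for value in values if value not in (None, "")]
        report[column] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
        }
    return report


records = [
    {"name": "Ana", "email": "ana@example.com"},
    {"name": "Ben", "email": ""},
    {"name": "Ana"},
]

report = profile(records)
```

In this sample, the report shows two missing emails out of three records, which is the kind of gap worth resolving before the merge.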

◈ Cleansing your data

Raw data is not ready for merging. Clean, standardized data should always come first. Before you merge your databases, remove old data and obsolete records, fix incorrect formatting, and make sure everything is standardized. Only then is the database ready to be merged.

◈ Choosing the right merging tools

Not everything gets merged in the same way. Different types of data need different preparation and different merge methods. Choose between appending rows, merging columns, and conditional merges based on your needs and requirements.

Therefore, evaluate your data before you take any steps to merge your databases. The information you keep in the database must be accurate and complete in all respects; you cannot merge inaccurate and dirty data reliably. To keep everything in sync, you need the right tools to merge your datasets.

Check one thing: your chosen tool should keep all information accurate and complete every time.
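The three merge styles named in this step (appending rows, merging columns, and conditional merges) can be illustrated with plain Python lists of dictionaries. This is only a sketch; the helper names and sample fields are assumptions, and a dedicated tool would normally handle this at scale.

```python
def append_rows(a, b):
    """Stack the records of two datasets that share the same columns."""
    return a + b


def merge_columns(a, b, key):
    """Add the fields from `b` to records in `a` that share the same key value."""
    index = {record[key]: record for record in b}
    return [{**record, **index.get(record[key], {})} for record in a]


def conditional_merge(a, b, key, condition):
    """Like merge_columns, but only when the matching `b` record passes `condition`."""
    index = {record[key]: record for record in b}
    result = []
    for record in a:
        match = index.get(record[key])
        if match is not None and condition(match):
            result.append({**record, **match})
        else:
            result.append(dict(record))
    return result


customers = [{"id": 1, "name": "Ana"}, {"id": 2, "name": "Ben"}]
cities = [{"id": 1, "city": "Porto"}, {"id": 3, "city": "Lagos"}]

stacked = append_rows(customers, cities)
joined = merge_columns(customers, cities, "id")
filtered = conditional_merge(customers, cities, "id", lambda r: r["city"] != "Porto")
```

Both column merges keep every record from the first dataset, so nothing is dropped when no match exists.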

◈ Test your merge process

Just as a mock test prepares you for the real exam, you should test your merging process before you move further. It’s recommended that you test the merge on a small scale first, because a trial run gives you a detailed idea of how the process behaves. This is why large corporations prefer to run a test before committing.

Testing before rolling out any tool or technology for merging databases is always preferable. It can surface issues at an early stage and let you refine your approach. Testing is a simple stage, but it can prevent big mistakes later on.

◈ Validate everything

Validation is the final step, but it should never be neglected. Validate your data after completing the merge. Merging can sometimes leave your data in a risky state, and you need to get out of it fast. Applying data validation rules helps you catch and fix these problems. Make sure that your merged data meets expectations and is fit for use by checking its accuracy, consistency, and completeness.

Data survivorship & creating golden records

Merge and purge help you identify and remove duplicate entries. Beyond removing duplicates, merging and purging also ensure you have a clean database. But there is one problem: how do you know which data to remove and which to keep?

Suppose your database has three records for the same entity, and each record shows a different address. That means you have three addresses to choose from. How would you pick the right one?

It is difficult. But once you have set an “intelligent” rule, it becomes easy.

Data survivorship is the core element you need to work on for this. It’s the process of identifying and merging the best attributes from your duplicate records. It helps create a single, true version of the record for an entity, i.e., a golden record.
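One possible survivorship rule, shown here purely as a sketch, is “for each field, keep the most recently updated non-empty value”. The rule, the `updated` field, and the `build_golden_record` helper are assumptions; your own rule might instead favour a trusted source or the most complete record.

```python
from datetime import date


def build_golden_record(duplicates, fields):
    """For each field, take the newest non-empty value across the duplicates."""
    newest_first = sorted(duplicates, key=lambda r: r["updated"], reverse=True)
    golden = {}
    for field in fields:
        golden[field] = next((r[field] for r in newest_first if r.get(field)), None)
    return golden


# Three hypothetical duplicate records for one entity, each with its own address.
duplicates = [
    {"name": "Ana Silva", "address": "12 Old Road", "phone": "+15550102000",
     "updated": date(2020, 1, 5)},
    {"name": "Ana Silva", "address": "7 New Street", "phone": "",
     "updated": date(2024, 2, 10)},
    {"name": "A. Silva", "address": "3 Middle Lane", "phone": "",
     "updated": date(2022, 6, 30)},
]

golden = build_golden_record(duplicates, ["name", "address", "phone"])
```

The resulting record takes the newest address while keeping the phone number that only the oldest record had, so no useful attribute is lost.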