Imagine you’re an employee of a prestigious organization. You work with the sales team, which used to be kind of frustrating, but now you kinda enjoy it. The only thing you don’t like is the behavior of your immediate senior manager. He’s been quite rough on you.

Anyway, you possess some good qualities, and that’s why you don’t speak up about these issues to HR. Well, the intelligent HR team at your company got to know about this from other employees. And the team has decided to conduct an anonymous survey to reveal “employee satisfaction level.”

I believe you guessed what happened next…

Yes, the behavioral issues of your manager got exposed, even though you did not speak directly to anyone. The only thing you did was answer the survey honestly.

Now think about it. Something you cannot express socially, you feel free to express when there is no trace of who said what. The pressure that keeps people from answering honestly is known as “response bias”. It’s a common and yet problematic element in surveys.

Let’s talk more about response bias in this blog.

What does response bias mean

Surveys provide usable data to businesses. Naturally, the responses that the survey will collect should be honest and free from bias.

But that happens only in rare cases. Most responses carry some response bias.

Response bias refers to the conditions or factors that influence survey responses. It happens for many reasons. Sometimes it is social desirability: respondents answer the way they think they ‘should’ answer. The result is that you cannot get accurate or honest answers from your respondents.

Dishonest answers don’t represent the true view of your respondents. They produce inaccurate data that skews your survey results.

For example, suppose respondents are asked how often they consume alcohol and are given the options ‘frequently’, ‘sometimes’, and ‘infrequently’. They are most likely to choose ‘sometimes’ or ‘infrequently’, even if they drink regularly. This is a classic example of a dishonest answer, and it undermines the accuracy of the survey analysis.

You need to remove response bias from your survey responses. Understanding the patterns of response bias is the best way to deal with them.

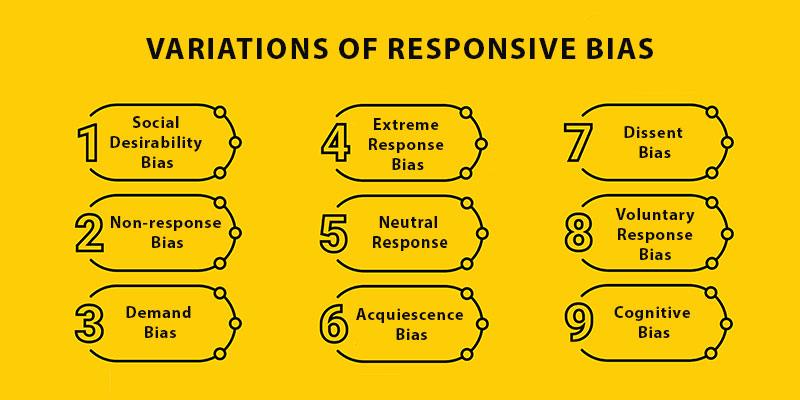

Variations of response bias

Dishonest survey responses are a product of response bias, but they are not the whole story. There are several kinds of response bias, and if left unaddressed, they can ruin your survey analysis.

The following section explains the types of response bias you may encounter.

Social desirability bias

Whenever sensitive questions are asked, respondents tend to show social desirability bias. Rather than answering what is true, respondents choose to answer what is socially desirable or more acceptable. Giving a ‘good’ answer instead of an honest one produces social desirability bias.

Respondents show social desirability bias on many topics. It appears when you ask them about their personal income, alcohol consumption, religion, patriotism, health, etc.

Not only the responses, but the questions are equally responsible for triggering social desirability bias.

People do not want to sound wrong or socially awkward. Saying ‘yes’ to a sensitive question rarely comes naturally, and an answer shaped by that discomfort should be treated as social desirability bias.

When conducting surveys on these sensitive topics, focus intensively on your questions. Write inclusive questions to get bias-free responses.

Non-response bias

When one part of your selected sample audience does not participate in the survey process, it creates non-response bias. If one segment of your participants does not respond to your survey, the results will not reflect the whole sample.

Non-response bias is quite tricky for researchers because the outcome does not present the complete picture. The results implicitly assume that those who responded and those who did not share the same experiences, which may not be true.

🔍 Suppose you’re researching the impact of a wrinkle-free face cream among a wide female population. The responses you collect will vary from group to group. And some of the respondents may not be eager to give you a response at all.

Ultimately, you’ll end up overrepresenting one part of the audience in your sample. The major problem with such a survey is that you cannot draw accurate conclusions about the population you meant to study.
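One rough way to spot this problem is to compare response rates across the segments you invited. The sketch below is only an illustration: the `response_rates` helper, the age-group labels, and the numbers are all made up for this example.

```python
from collections import Counter


def response_rates(invited, responded):
    """Return the share of invited people who responded, per segment.

    Both arguments are lists of segment labels, one label per person.
    """
    invited_counts = Counter(invited)
    responded_counts = Counter(responded)
    return {
        segment: responded_counts.get(segment, 0) / total
        for segment, total in invited_counts.items()
    }


# Hypothetical data: 50 people invited from each age group.
invited = ["18-30"] * 50 + ["31-50"] * 50
# Hypothetical outcome: the younger group answered far more often.
responded = ["18-30"] * 40 + ["31-50"] * 10

rates = response_rates(invited, responded)
for segment, rate in rates.items():
    print(f"{segment}: {rate:.0%} responded")
```

A large gap between segments, like the one in this invented data, suggests the results lean toward the group that answered more often.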

Demand bias

Among the biases, demand bias is one of the more peculiar and interesting. It happens when your participants change their views after learning the research agenda.

Basically, your participants take clues about your research from the research hypothesis. They may get the topic ideas from;

As a result, participants who are aware of the research provide more concrete answers than the participants who are unaware. Awareness makes them conscious, and they respond clearly.

Suppose you have organized a campaign in a high school on STD transmission and prevention. Students who could guess the research questions came prepared and answered freely, without shame or fear. Students who could not guess but still took the survey chose only socially acceptable options. That difference is demand bias.

Sometimes, revealing the purpose of the research can prevent demand bias. It encourages honest answers and discourages socially approved ones.

Extreme response bias

As the name suggests, extreme response bias indicates an extreme view on a topic or subject.

Extreme responses mostly show up in surveys that ask for ratings to describe a feeling or emotion. The respondents tend to take an extreme stance on various matters.

🗪 “On a scale of 1 to 10, how would you rate the organization where you currently work?” If a researcher asks you this question, what number would you give? Remember, you need to take an extreme stance on this.

If you answer 1, 2, or even 10, your answer counts as an extreme response.

Hardly anybody is completely happy at their workplace, but that does not mean you’d rate it 1 or 2 🫣. That reflects extreme dissatisfaction. A rating of 10, on the other hand, seems almost sarcastic. Few people other than the founder are completely happy in the workplace.

Naturally, researchers will meet respondents who strongly disagree on various things. Interestingly, some will do that even if they have no strong feeling about the topic.

Whenever you realize you’ve counted extreme responses, remove them from consideration. Do not let a pattern of extreme answers drive your survey analysis. It will skew your results.
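As a minimal sketch, you could flag respondents whose ratings sit mostly at the ends of the scale. It treats 1, 2, and 10 as extreme on a 1 to 10 scale, following the example above. The `extreme_share` helper, the 0.75 cut-off, and the sample data are assumptions made for illustration, not a standard rule.

```python
def extreme_share(ratings, extreme_values=(1, 2, 10)):
    """Return the fraction of ratings that fall on an extreme value."""
    if not ratings:
        return 0.0
    extreme = sum(1 for rating in ratings if rating in extreme_values)
    return extreme / len(ratings)


# Hypothetical ratings on a 1-10 scale, keyed by respondent id.
ratings_by_respondent = {
    "r1": [10, 10, 1, 10],
    "r2": [6, 7, 5, 8],
}

THRESHOLD = 0.75  # assumed cut-off; tune it for your own survey

flagged = [
    respondent
    for respondent, ratings in ratings_by_respondent.items()
    if extreme_share(ratings) >= THRESHOLD
]
print(flagged)
```

Flagged respondents are candidates for a closer look before you decide whether to set their answers aside.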

Neutral response

Having a neutral view is usually a good thing. It gives a balanced picture, but it is not helpful in survey assessment. Surveys need raw data that is unfiltered and unbiased. If respondents take a diplomatic stance, the results become very hard to assess.

This bias appears when participants pick the neutral option for most or all questions.

Suppose you surveyed 10 people, and everyone chose ‘neutral’ for every question. You can guess the outcome: your survey falls flat. If everyone selects the neutral option, the averaged result is also “neutral”.

Having a different perspective or a difference in opinion helps surveys to proceed further. It provides an inclusive insight into your survey results. Thus, always make sure your respondents feel free to explore different options to respond.
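The ten-person example can be checked with a little arithmetic. The sketch below assumes a 1 to 5 scale where 3 means ‘neutral’ and four questions per person. Both are assumptions for illustration, since the example does not specify a scale.

```python
from statistics import mean

NEUTRAL = 3  # midpoint of an assumed 1-5 scale

# Hypothetical survey: 10 respondents, 4 questions, everyone picks neutral.
answers = [[NEUTRAL] * 4 for _ in range(10)]

# Flatten all answers into one list of scores.
all_scores = [score for row in answers for score in row]

average = mean(all_scores)
neutral_share = all_scores.count(NEUTRAL) / len(all_scores)

print(f"average score: {average}, neutral share: {neutral_share:.0%}")
```

The average lands exactly on the neutral midpoint, so the result says nothing about what respondents actually think.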

Acquiescence bias

Acquiescence bias occurs when respondents simply agree with whatever the research statement says, regardless of their own view.

Acquiescence bias prevents respondents from giving their own opinion during a survey. Basically, the respondents think about how the researcher would react if they came up with a different opinion. Rather than stating their own view, they choose to follow the researcher.

For example, suppose researchers ask their sample respondents about their views on certain political events. The respondents will be more likely to stay neutral or favor the researcher’s perspective even if they don’t agree with that view. In this case, the respondents do not want to expose their political views to the researcher. They basically do not want to be judged because of their views.

Acquiescence bias is less likely to creep into your research if you ask better questions. To avoid it, leave highly charged topics like politics and religion out of general surveys.

Dissent bias

It often happens that we respond to survey questions without reading them properly.

It’s a classic case of dissent bias.

There is a common pattern that can help you detect this bias. The most prominent one is “the respondent has marked all the answers the same”. For example, they clicked “strongly agree” or “strongly disagree” for every question. They did not even read the questions, just answered quickly to get the rewards.

Dissent bias can ruin survey insights completely. You’ll end up with useless responses that you cannot take forward for assessment, which wastes both time and money. You need to prevent dissent bias at any cost. Improving the questions helps to a certain extent.
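The “all answers the same” pattern is easy to check automatically. Here is a minimal sketch, assuming each respondent’s answers are stored as a list of option labels. The `is_straight_lined` helper and the sample data are hypothetical.

```python
def is_straight_lined(answers):
    """Return True if a respondent gave the same answer to every question.

    A single answer is not enough to call it a pattern, so at least
    two answers are required.
    """
    return len(answers) > 1 and len(set(answers)) == 1


# Hypothetical responses, keyed by respondent id.
responses = {
    "r1": ["strongly agree"] * 5,
    "r2": ["agree", "disagree", "neutral", "agree", "strongly agree"],
}

suspicious = [
    respondent
    for respondent, answers in responses.items()
    if is_straight_lined(answers)
]
print(suspicious)
```

A match is only a hint. Someone may genuinely agree with every statement, so it is worth reviewing flagged responses rather than deleting them blindly.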

Voluntary response bias

Researchers should always draw samples from different population groups so that the survey reflects the whole population. However, if the researcher lets participants volunteer for the survey, it will produce response bias.

Voluntary participants are not a substitute for a properly selected sample. If researchers include every response given by voluntary participants, the survey results will not reflect the true picture.

Overreporting is a big issue, and you cannot allow it in your surveys. Allowing voluntary participants in your survey process increases the chance of overreporting. It becomes hard to generate accurate results if you overreport some views while underrepresenting others.

🚧 Avoid voluntary response bias if you want your research to produce reliable results. You need to avoid everything that stands in the way of accurate survey results.

Cognitive bias

So, during the exit point, you asked that customer to leave a message for the company.

👀 Guess what happened?

➖ The customer wrote a negative review about your company, even though you offered something different.

This is cognitive bias. A person who has had a poor experience is unlikely to give positive feedback. Even if the sequence or nature of events changes, the cognitive bias tends to stay the same.

🌟 On a scale of 1 to 10, how would you rate your recent experience at your close friend’s restaurant? Maybe 9 or 10. If so, you’re applying your cognitive bias here.

Overall, response bias should never get in your way when you collect survey responses. It will skew your results. You’ll end up with poor responses even after spending so much on survey data collection. Therefore, you need to understand what types of bias you may encounter while conducting surveys. Hope this section helps. The following section explains how these biases form and how you can tackle them.

What causes response bias

Bias doesn’t happen naturally. There are various reasons behind it. Let’s look at some common causes in detail.

▶ Faulty survey designs

Surveys should be simple. That’s the main requirement. If respondents feel stressed by the survey, response quality suffers. They may not feel like responding to your questions.

There can be two reasons behind this:

🔺 Questions are difficult

🔺 Design is not intuitive

Asking tough questions is equal to asking your audience to leave the survey page. It is often said that people cannot stay attentive for more than 8 seconds. Attention spans are limited now. So, asking people to complete your survey, and that too with complex questions, is not the right way.

On the other hand, if your survey design is not intuitive, it will create blockages. Your respondents will get stuck in the middle of the survey. Ultimately, they’ll bounce and skip your questions.

▶ Complex survey methodology

If you want to collect socially acceptable answers from your surveys, you can go for a face-to-face survey or phone calls. But if you want something more authentic, you need to take a deeper route to collect your responses. Survey methodology comes into play at this time to provide you with this opportunity.

Anonymous surveys or online surveys are best if you want to collect some genuine information from your participants. People tend to feel more secure when answering anonymous surveys.

▶ Respondent-driven results

Surveys work differently for different individuals. Social stigma, the desire to please the researcher, not recognizing one’s own patterns, and so on are all part of the survey process. When these things come into the picture, the results become respondent-driven.